Beyond Prompting: Building a Data-Literate AI Partner

Your AI has your data but not your context. Here’s what to do about it.

As part of my year-end review, I asked Claude to analyze why my newsletter was growing.

Subscribers were up 47%. Engagement rate held steady at 8-10%. Paid conversions increased by 28%.

The AI’s analysis:

“Your content strategy is working. Engagement metrics show strong audience resonance. Continue current approach.”

But I knew something the AI didn’t: those numbers had almost nothing to do with my content strategy.

My growth came from one viral post about NotebookLM that hit at the perfect moment. I also wrote a guest post for Michael Spencer covering my in-depth NotebookLM analysis. The “strategic content pillars” I’d been carefully crafting for months showed decent results, but they weren’t what was actually driving the exponential growth in new subscribers.

The AI had all my data. It just didn’t understand the context behind my newsletter’s growth.

Today’s guest post is from Hodman Murad, who writes The Data Letter—a newsletter where she dissects AI and data failures, from hallucinated growth metrics to optimized-away customers.

She’s also the founder of Asaura AI, where she’s building AI for executive function. She writes about the intersection of AI, productivity, and mental health at her upcoming Substack, Asaura (launching January 2026). When she’s not debugging data pipelines or AI systems, she’s probably explaining why your single source of truth is actually three conflicting dashboards.


Hodman has solved a problem I see constantly: AI tools that can crunch numbers but can’t think strategically. They see great metrics but miss the actual drivers behind them. They can’t tell you which of your efforts are working and which are just along for the ride.

What’s failing here is our onboarding, not the AI.

So she developed a three-step framework that turns AI from a generic assistant into a strategic partner that actually understands your context. Instead of correcting the same misunderstandings in every conversation, you teach your AI once, properly, and it starts anticipating what you need, catching the mistakes you’d catch, and asking the questions you’d ask.

If you’ve ever gotten technically accurate but strategically blind answers from AI, this framework will change how you collaborate.

Here’s Hodman.

Hello, Hodman here 👋🏻

Your AI assistant has access to your data, but it doesn’t understand your business. You ask a nuanced question about customer behavior, and it returns technically accurate numbers wrapped in generic advice. The analysis checks out mathematically, but it completely misses what matters to your business.

The limitation isn’t the AI’s capability. It’s the AI’s context.

I call this the Context Ceiling: the invisible barrier where your AI has access to all your data but understands none of your reality. It sees ‘conversion rate: 3.2%,’ but doesn’t know whether that’s strong or alarming for your business. It can calculate customer lifetime value, but doesn’t understand that Enterprise customers require a completely different strategy than SMB customers, even when the numbers look similar.

AI can learn these distinctions. The challenge is that we’ve been treating AI like a search engine when we need to treat it like a new analyst joining our team. New analysts need proper onboarding, not just database access.

After years of working as a data scientist and training countless colleagues in analytics, I’ve found that the same principles that make human analysts effective apply directly to AI collaboration. The answer isn’t writing better prompts. It’s systematically teaching your AI the vocabulary, boundaries, and logic of your specific work.

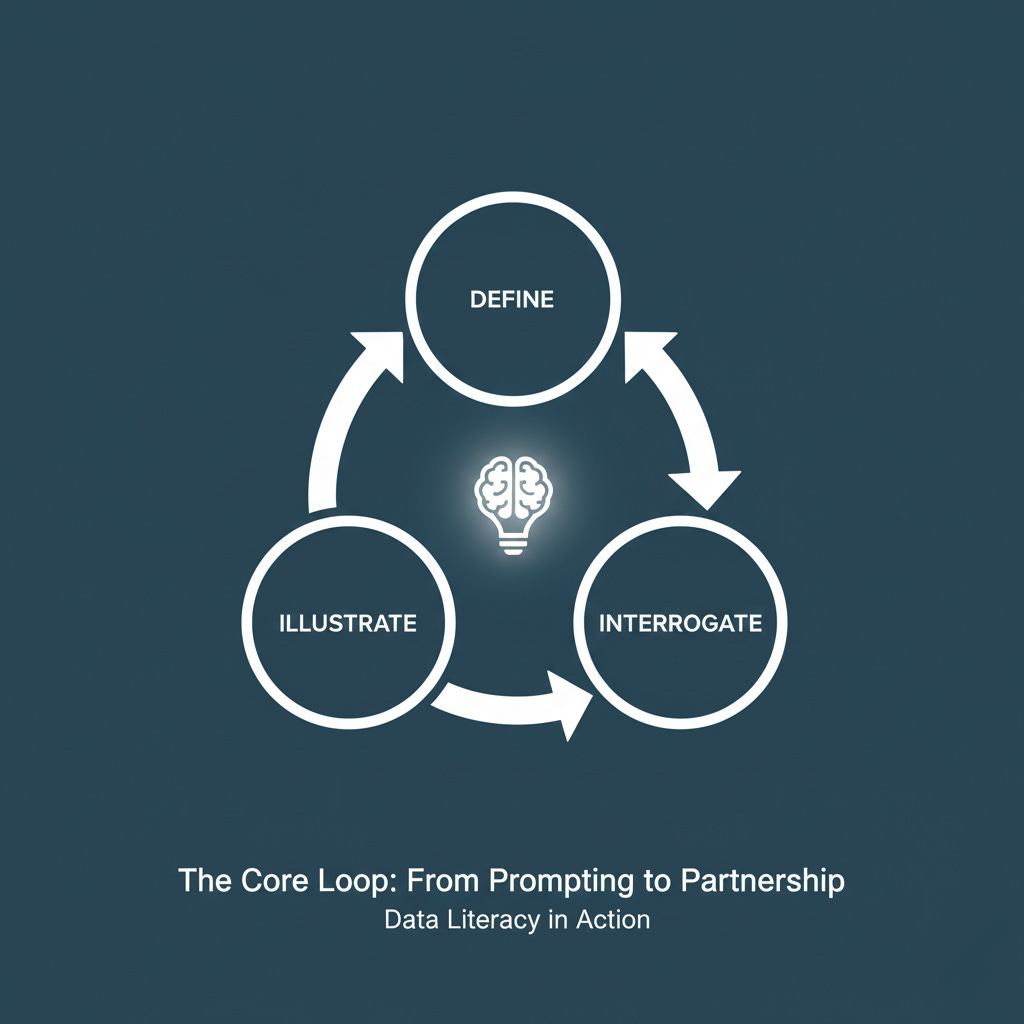

Introducing The Data Literacy Loop

The loop is a three-step cycle that transforms your AI from a generic assistant into a knowledgeable partner who understands your context.

This framework has dramatically reduced the time I spend explaining and re-explaining the same concepts. Instead of correcting my AI’s misunderstandings in every conversation, I invest the time once to teach it properly. The result is an AI partner that anticipates the nuances I care about, catches the mistakes I would catch, and asks the questions I would ask.

A downloadable one-page version of this framework is available at the end of this article. Consider it your quick-reference guide for implementing this approach immediately.

Here’s how each step works with real examples from my work:

Step 1: Define (Create a Shared Vocabulary)

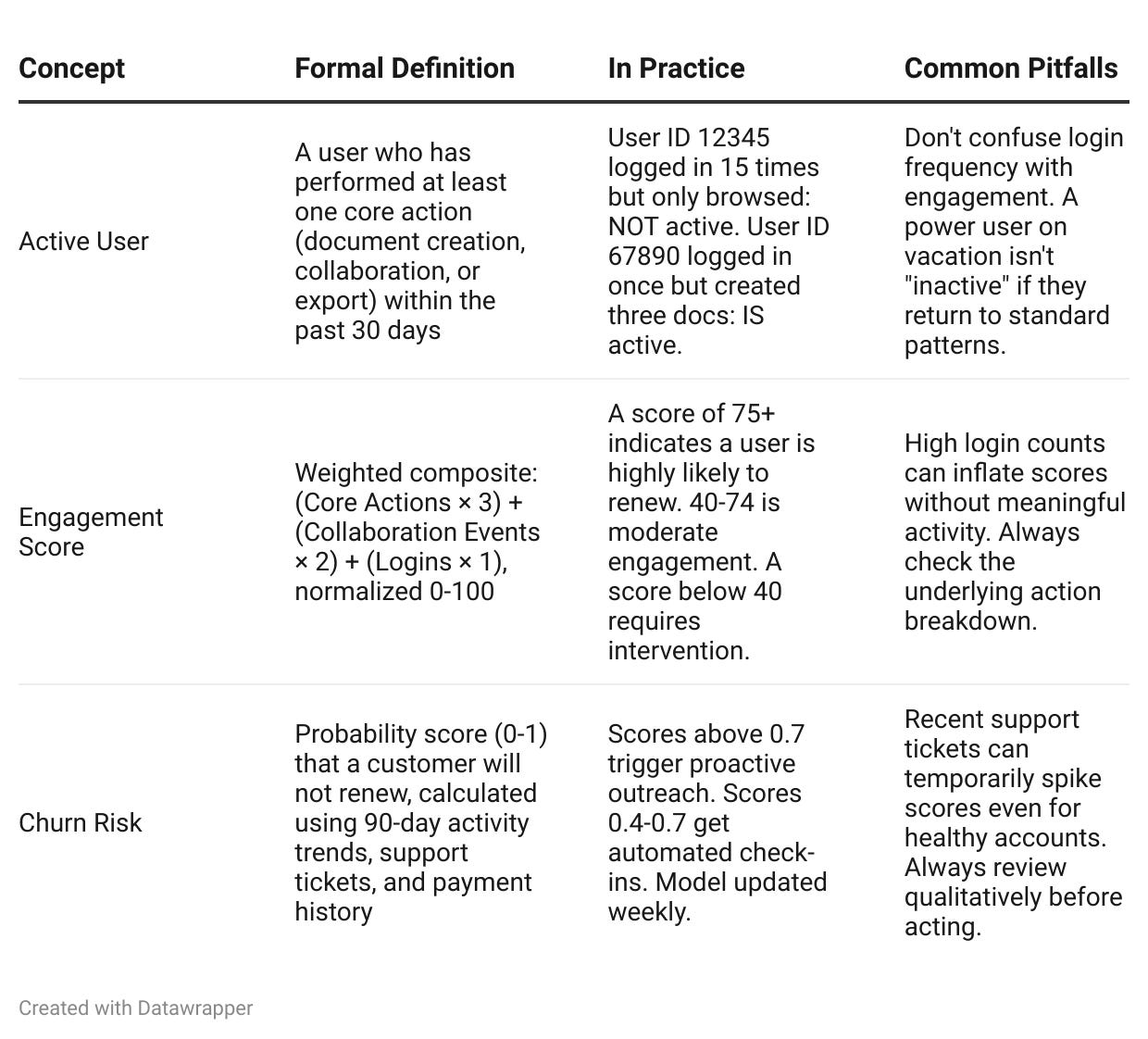

The first step is the most overlooked: creating a Master Definition List for your key concepts. Not the textbook definition, but the working definition that reflects how these terms actually function in your world.

When I was analyzing engagement for a SaaS product, ‘Active User’ seemed straightforward until three different stakeholders gave me three different definitions.

Marketing counted anyone who logged in once per month.

Product wanted users who performed a core action.

Finance needed a definition that aligned with our revenue recognition policies.

None of them was wrong, but without clarity, every analysis became a debate over definitions rather than a decision.
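To see how quickly those definitions diverge, here is a minimal Python sketch that applies all three rules to the same user. The `UserMonth` fields and every rule body are hypothetical stand-ins: the article doesn’t spell out Finance’s revenue-recognition logic, so a paid-plan check is assumed here purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class UserMonth:
    """One user's activity over a 30-day window (hypothetical fields)."""
    logins: int
    core_actions: int    # e.g. documents created or collaborated on
    has_paid_plan: bool  # assumed proxy for a revenue-recognition rule


def active_marketing(u: UserMonth) -> bool:
    # Marketing: logged in at least once this month
    return u.logins >= 1


def active_product(u: UserMonth) -> bool:
    # Product: performed at least one core action
    return u.core_actions >= 1


def active_finance(u: UserMonth) -> bool:
    # Finance: hypothetical rule that only paying accounts count
    return u.has_paid_plan


DEFINITIONS = {
    "marketing": active_marketing,
    "product": active_product,
    "finance": active_finance,
}


def classify(u: UserMonth) -> dict[str, bool]:
    """Apply every team's definition to the same user."""
    return {team: rule(u) for team, rule in DEFINITIONS.items()}


# A free-tier user who logs in often but never creates anything
browser = UserMonth(logins=12, core_actions=0, has_paid_plan=False)
print(classify(browser))  # {'marketing': True, 'product': False, 'finance': False}
```

One user, three verdicts: exactly the kind of debate a shared definition is meant to settle before analysis starts.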

The solution was to create a standardized format that captured the definition and its context.

But you don’t have to build these definitions from scratch.

Here’s a prompt to get started:

‘Help me create a Master Definition for [your concept]. I need:

1. A formal definition that includes the calculation or logic

2. A practical example showing when this concept applies

3. A practical example showing when it doesn’t apply

4. Common pitfalls or misinterpretations to watch for

Context about my business: [brief description of your industry/product].’

Your AI will generate a first draft that you can refine based on your specific business reality.

Here’s what a proper Master Definition looks like:

Once you create this list, you feed it to your AI before any analysis. This becomes the foundational context that informs every subsequent conversation.

The power is in the specificity. Notice how each definition includes the formal logic, practical examples, and, most importantly, common pitfalls. This approach captures what the metric is and how it behaves in the wild.
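If you keep your definitions in a script rather than a doc, a sketch like the one below captures the same parts (formal logic, when it applies, when it doesn’t, pitfalls) and renders them into one block you can paste at the start of a conversation. The class and field names are illustrative assumptions, not a required format; the Active User content reuses the definition, edge case, and pitfalls described elsewhere in this article, while the “applies” example is a hypothetical one.

```python
from dataclasses import dataclass, field


@dataclass
class MasterDefinition:
    """One entry in a Master Definition List (illustrative field names)."""
    term: str
    formal_definition: str       # the calculation or logic
    applies_example: str         # when the concept applies
    does_not_apply_example: str  # when it doesn't
    pitfalls: list[str] = field(default_factory=list)

    def to_context(self) -> str:
        """Render this definition as plain text for an AI conversation."""
        lines = [
            f"Term: {self.term}",
            f"Definition: {self.formal_definition}",
            f"Applies: {self.applies_example}",
            f"Does not apply: {self.does_not_apply_example}",
            "Common pitfalls:",
        ]
        lines += [f"  * {p}" for p in self.pitfalls]
        return "\n".join(lines)


def build_context(definitions: list[MasterDefinition]) -> str:
    """Join all definitions into one block to share before any analysis."""
    header = "Use these working definitions for every analysis in this conversation."
    return "\n\n".join([header] + [d.to_context() for d in definitions])


active_user = MasterDefinition(
    term="Active User",
    formal_definition="Performed at least one core action in the last 30 days.",
    applies_example="A user who created a document last week.",
    does_not_apply_example="A user who logged in 25 times last month only to check notifications.",
    pitfalls=[
        "Login counts alone overstate activity.",
        "Making document creation artificially easy can inflate the count while lowering document quality.",
    ],
)

print(build_context([active_user]))
```

Keeping definitions as data means updating one entry when your business changes, rather than re-explaining it in every chat.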

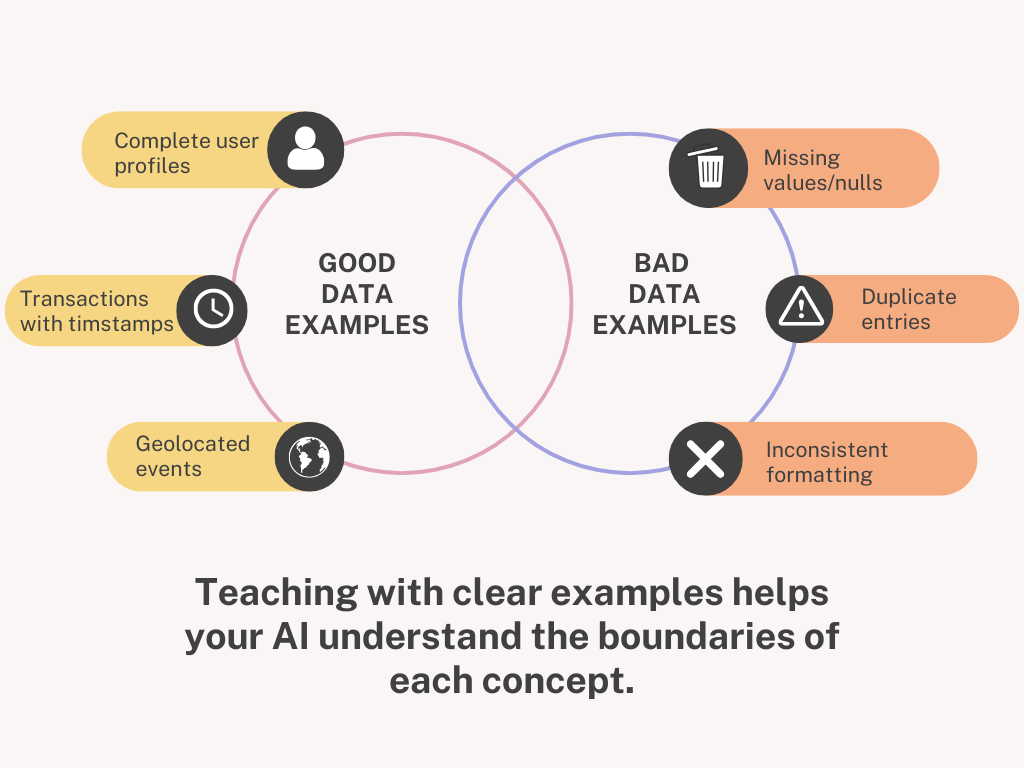

Step 2: Illustrate (Show, Don’t Just Tell)

Definitions alone aren’t enough. Just as you wouldn’t expect a new analyst to master your business from a glossary, your AI needs examples that demonstrate the boundaries and edge cases of each concept.

This is where most people stop too early. They provide one example and assume understanding, but in my experience training both humans and AI, learning happens most effectively in the contrast between what something is and what it isn’t.

If you’re struggling to identify strong examples, use this prompt:

‘Based on our definition of [Concept], help me create:

- 2 positive examples that clearly demonstrate this concept in action

- 2 negative examples that might look similar but don’t qualify

For each example, include:

- Specific metrics or behaviors

- Why it does or doesn’t meet our definition

- The business outcome (if relevant)

Here’s our definition: [paste your definition].’

Then refine these AI-generated examples with real data from your business.

When teaching my AI about the Engagement Score, I provided the formula and real-world scenarios:

Positive Examples (These ARE high engagement):

User A: 85 engagement score. Created 12 documents, collaborated on eight projects, logged in 20 times. Invited three teammates. Result: Renewed annual subscription early.

User B: 78 engagement score. Created only three documents, but all were heavily collaborated on (15+ collaborators each). Built templates used across the team. Result: Upgraded to the Enterprise plan.

Negative Examples (These are NOT high engagement despite appearing active):

User C: 45 engagement score despite 40 logins. All sessions were under 2 minutes. Zero documents created. Classic “tire-kicker” behavior. Result: Churned after trial.

User D: 35 engagement score. Created 10 documents but never returned after the first week. High initial burst, zero sustained engagement. Result: Dormant account.

The contrast is what does the teaching. Your AI learns that engagement depends on the overall pattern, not any single metric. User B created fewer documents than User D, but the depth of collaboration made all the difference.
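The four examples above can also be stored as labeled data and rendered as a contrastive block for your AI. This Python sketch uses the scores, behaviors, and outcomes as described in those examples; the `LabeledExample` structure and the score consistency check are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass
class LabeledExample:
    name: str
    engagement_score: int
    is_high_engagement: bool
    behavior: str
    outcome: str


EXAMPLES = [
    LabeledExample("User A", 85, True,
                   "Created 12 documents, collaborated on 8 projects, logged in 20 times, invited 3 teammates.",
                   "Renewed annual subscription early."),
    LabeledExample("User B", 78, True,
                   "Created only 3 documents, each with 15+ collaborators; built templates used across the team.",
                   "Upgraded to the Enterprise plan."),
    LabeledExample("User C", 45, False,
                   "40 logins, all sessions under 2 minutes, zero documents created.",
                   "Churned after trial."),
    LabeledExample("User D", 35, False,
                   "Created 10 documents but never returned after the first week.",
                   "Dormant account."),
]


def fmt(e: LabeledExample) -> str:
    return f"- {e.name} (score {e.engagement_score}): {e.behavior} Result: {e.outcome}"


def contrastive_block(examples: list[LabeledExample]) -> str:
    """Group positives and negatives so the AI sees the boundary side by side."""
    positives = [e for e in examples if e.is_high_engagement]
    negatives = [e for e in examples if not e.is_high_engagement]
    # Sanity check: every positive should outscore every negative
    if min(e.engagement_score for e in positives) <= max(e.engagement_score for e in negatives):
        raise ValueError("labels disagree with engagement scores")
    lines = ["These ARE high engagement:"]
    lines += [fmt(e) for e in positives]
    lines += ["", "These are NOT high engagement, despite appearing active:"]
    lines += [fmt(e) for e in negatives]
    return "\n".join(lines)


print(contrastive_block(EXAMPLES))
```

The check fails loudly if you mislabel an example, which is cheaper than discovering the contradiction mid-analysis.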

This approach mirrors how I train junior data scientists: I show them successful analyses alongside the mistakes I made, the false positives I chased, and the errors that only became apparent in retrospect. Your AI needs the same education.

Step 3: Interrogate (Stress Test the Understanding)

The final step is where you transform teaching into a partnership. Once you’ve defined your concepts and illustrated them with examples, you need to verify that your AI truly understands and can apply this knowledge independently.

I use three types of interrogation prompts, each designed to test a different level of understanding:

Level 1: Relationship Testing

‘Explain the relationship between Engagement Score and Churn Risk in our business. What patterns have you learned?’

This tests whether your AI can connect concepts logically. A good response will reference the examples you provided and draw appropriate conclusions. If it gives generic advice about engagement and churn that could apply to any business, you need more illustration.

Level 2: Risk Assessment

‘Based on our definition of Active User, what are the biggest risks to improving this metric? What behaviors might we inadvertently incentivize that would damage the business?’

This tests for nuance and systems thinking. Your AI should reference the ‘Common Pitfalls’ from your definitions and potentially identify new risks based on the examples you’ve shown. When I ran this test, my AI correctly identified that we could inflate Active User counts by making document creation artificially easy, which might increase the metric while decreasing document quality.

Level 3: Edge Case Classification

‘Does this scenario qualify as [your concept]? Why or why not?

Scenario: A user logged in 25 times last month but only to check notifications. They created zero documents and collaborated on nothing.’

This tests boundary recognition. Your AI should be able to classify ambiguous cases using the logic you’ve taught it. The correct answer, based on our definition, is that this user is NOT active despite their high login frequency.
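Once the definition is explicit, the classification itself is mechanical. A minimal Python sketch, assuming Active User means at least one core action in the last 30 days (the definition used later in this article) and assuming a hypothetical set of core actions; viewing a document is included because the worked example later treats document viewers as Active Users.

```python
from dataclasses import dataclass

# Hypothetical core actions; logins and notification checks are deliberately absent
CORE_ACTIONS = {"create_document", "collaborate", "view_document"}


@dataclass
class Event:
    action: str
    days_ago: int


def is_active_user(events: list[Event], window_days: int = 30) -> bool:
    """True if the user performed at least one core action inside the window."""
    return any(e.action in CORE_ACTIONS and e.days_ago < window_days for e in events)


# The scenario: 25 logins last month, each only to check notifications
scenario = [Event("login", d) for d in range(25)]
scenario += [Event("check_notifications", d) for d in range(25)]

print(is_active_user(scenario))  # False: high login frequency, zero core actions
```

Comparing your AI’s verdict against a rule like this is a quick way to spot where its reasoning drifts from your definition.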

Here are ready-to-use prompt templates you can adapt:

1. “Given our definition of [Concept], analyze this scenario: [describe edge case].

Does it qualify? Why or why not? What additional information would help you decide?”

2. “Compare these two situations and tell me which demonstrates stronger [Concept]:

Situation A: [describe]

Situation B: [describe]

Explain your reasoning using our definitions.”

3. “What would be a red flag that we’re measuring [Concept] incorrectly?”

4. “What behaviors might game this metric?”

The responses will tell you immediately whether your teaching has landed. Vague answers mean you need more examples. Overly confident answers that ignore your pitfalls mean you need to reinforce boundaries. Thoughtful answers that reference your specific context mean you’ve succeeded.
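If you rerun these prompts after every update to your definitions, a rough first-pass triage can be automated. The Python sketch below is a crude keyword heuristic, an assumption rather than an established evaluation method: it only checks whether an answer mentions your context terms and your pitfalls, so it cannot judge confidence or correctness, and you should still read the answers yourself. The terms and sample answers are hypothetical.

```python
def triage_response(answer: str, context_terms: list[str], pitfalls: list[str]) -> str:
    """Map an AI answer onto the three outcomes above using keyword matching only."""
    text = answer.lower()
    mentions_context = any(term.lower() in text for term in context_terms)
    mentions_pitfall = any(p.lower() in text for p in pitfalls)
    if not mentions_context:
        return "vague: add more examples"
    if not mentions_pitfall:
        return "ignores pitfalls: reinforce boundaries"
    return "landed: answer reflects your context"


# Hypothetical terms drawn from the Active User definition and its pitfalls
CONTEXT = ["core action", "engagement score", "active user"]
PITFALLS = ["login", "notification"]

answers = {
    "generic": "Engagement usually correlates with retention, so keep engagement high.",
    "overconfident": "Yes: 25 sessions in a month clearly makes this an Active User.",
    "thoughtful": "No. Checking notifications isn't a core action, so the login count doesn't qualify them.",
}
for label, answer in answers.items():
    print(label, "->", triage_response(answer, CONTEXT, PITFALLS))
```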

Putting It All Together: A Real-World Workflow

Here’s how this worked in practice with a real analysis I ran last quarter:

The Question: “Why did our Active User count increase by 15% but our Engagement Score only increased by 3%?”

Without the Data Literacy Loop, my AI would have given me generic possibilities: ‘Users might be logging in more but not engaging deeply’ or ‘Check your data for anomalies.’ Technically accurate, practically useless.

With the Data Literacy Loop:

Step 1 (Define): I had already taught my AI our specific definitions of Active User (at least one core action in 30 days) and Engagement Score (a weighted formula prioritizing creation and collaboration).

Step 2 (Illustrate): My AI had seen examples of “false activity”—high login counts without meaningful engagement—and understood this was a known pattern in our product.

Step 3 (Interrogate): When I asked the question, my AI immediately hypothesized: “This pattern suggests you have more users crossing the minimum threshold for Active (one core action) without sustained engagement. You may be seeing an influx of trial users or a successful activation campaign that’s getting users to first value, but not to habit formation.”

It then suggested:

“Segment your new Active Users by their first core action type. I suspect you’ll find document viewers increased while document creators stayed flat. Based on your examples, viewers rarely convert to engaged users.”

That hypothesis was correct. We had run a campaign encouraging users to “explore a sample document,” which drove up Active User counts but didn’t foster lasting engagement. Without the Data Literacy Loop, I would have spent hours exploring dead ends. With it, I could test a hypothesis in minutes.
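The segmentation the AI suggested is straightforward to run yourself. This Python sketch counts new Active Users by the type of their first core action in two periods; the event format, action names, and sample users are all hypothetical, so swap in your own export.

```python
from collections import Counter

# Hypothetical core actions, matching the Active User definition
CORE_ACTIONS = {"create_document", "collaborate", "view_document"}


def first_action_mix(users: dict[str, list[tuple[int, str]]]) -> Counter:
    """Count users by their first core action.

    `users` maps a user id to (day, action) events. Non-core events such as
    logins are ignored; same-day ties are broken alphabetically by action name.
    """
    mix: Counter = Counter()
    for events in users.values():
        core = sorted((day, action) for day, action in events if action in CORE_ACTIONS)
        if core:
            mix[core[0][1]] += 1
    return mix


# Made-up sample data for two periods of newly Active Users
before = {
    "u1": [(1, "login"), (2, "create_document")],
    "u2": [(3, "view_document"), (4, "create_document")],
}
after = {
    "u3": [(1, "create_document")],
    "u4": [(2, "login"), (2, "view_document")],
    "u5": [(5, "view_document")],
    "u6": [(6, "view_document"), (9, "collaborate")],
}

prev, curr = first_action_mix(before), first_action_mix(after)
for action in sorted(CORE_ACTIONS):
    print(f"{action}: {prev[action]} -> {curr[action]}")
```

In this made-up sample, creators stay flat while viewers grow, which is the shape of pattern the AI predicted; your real data may look quite different.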

This is the strategic dividend: AI that asks the right follow-up questions. AI that catches the patterns you’ve learned to watch for. AI that knows what “good” looks like in your specific context.

The Strategic Dividend

The Data Literacy Loop transforms your collaboration with AI, making it more efficient rather than simply making your AI smarter. Every hour you invest in teaching pays back many times over in analysis that fits your reality.

The benefits compound:

Fewer correction cycles: Your AI gets it right the first time because it understands your context

Deeper insights: Your AI can spot patterns you’ve trained it to recognize, including ones you might miss

Faster onboarding: When your business evolves, updating your definitions is faster than re-explaining context in every conversation

Better questions: An educated AI asks clarifying questions that sharpen your own thinking

Most importantly, you shift from prompting for answers to building a genuine analytical partner. Your questions evolve from, ‘Can you analyze this data?’ to, ‘What should we be worried about here?’ The answers you get reflect your business logic, your historical patterns, and your strategic priorities.

The framework is simple, but the impact is transformational. Start with your three most important business concepts. Define them with specificity. Illustrate them thoroughly. Interrogate the understanding. Then watch as your AI goes from assistant to advisor.

Ready to implement this today? Download the one-page Data Literacy Loop framework below for a practical template you can fill out and use immediately with your AI assistant.
