Prompt Engineering 101: Simple Steps to 10x Your AI Results

Stop getting mediocre results by working with AI.

"Write a blog post about productivity."

That was my first prompt to ChatGPT back in November 2022. What I got back was so generic it could have been copied from any mediocre self-help blog. Disappointed, I closed the tab and went back to writing everything myself, hunched at my desk in solitude.

Six months later, I tried again with a different approach:

"Write a blog post about productivity using the Pomodoro Technique. Target audience: burned-out tech workers. Tone: conversational but backed by research. Include 3 unexpected benefits and address common criticisms. Format: ~1000 words with H2 headings and bullet points for skimmability."

The difference was dramatic. Instead of AI-generated filler, I got something I could actually use with minimal editing. When I saw it, I couldn't unsee it.

What changed wasn't the AI—it was how I communicated with it.

This is prompt engineering: the art of extracting exactly what you need from AI tools by speaking their language. It's not about coding or technical jargon—it's about knowing how to give AI the context, constraints, and direction it needs to deliver remarkable results.

In this post, I'll share the practical prompt engineering techniques I've learned that have saved me countless hours on everything from drafting emails to researching complex topics to creating content for my business. You'll learn how to turn vague, disappointing AI outputs into responses that feel like they were created by an expert who knows exactly what you need.

The best part? You don't need a technical background to master this—just a framework anyone can learn in minutes.

But first…

Why does AI often give generic, bland responses when you ask simple questions?

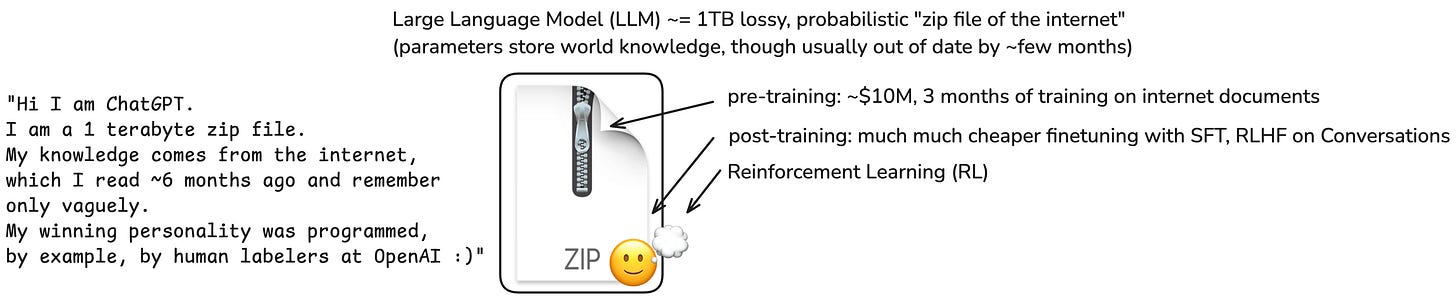

Simple answer: It's because of how these models work.

Complex answer: ChatGPT and similar AI don't "know" things the way humans do. They don't have clear, discrete knowledge like "Paris is the capital of France." Instead, they have statistical patterns derived from massive amounts of text.

Think of it like this: ChatGPT doesn't have a filing cabinet of facts. It has billions of fuzzy connections between words and concepts based on its pre-training and post-training data. When you give it a vague prompt like "Write about leadership," it doesn't know which of those countless connections you want it to focus on.

Without specific guidance, the AI defaults to averaging everything it's seen about leadership across millions of articles, books, and forum posts. The result? The blandest, most generic take possible – the "statistical average" of all leadership content on the internet.

This is why specific prompts work better. When you add details about tone, audience, length, format, and examples, you're helping the AI narrow down which of those connections to prioritize. You're guiding it away from the vague average and toward the specific area you want to explore.

In other words:

Vague prompts get vague responses.

Specific prompts get specific responses.

The art of prompting: getting AI to deliver what you actually want

So how do we create these specific prompts? After hundreds of hours working with AI tools, I've developed a simple framework that works across any use case—whether you're drafting emails, generating marketing copy, planning a vacation itinerary, or researching a complex topic:

Context: AI has no idea who you are, what you need, or why you need it. Provide background information that a human would intuitively understand.

Clarity: Be specific about exactly what you want. Vague requests lead to vague outputs.

Constraints: Set boundaries and requirements. This prevents the AI from making assumptions and helps it prioritize what matters most.

Character: Specify the tone, style, and perspective you want the AI to adopt. This transforms generic content into something with personality.

Criteria: Tell the AI how to evaluate its own work. This creates a quality filter that improves outputs.

Let me show you how each of these principles transforms real-world results.

1. Context

❌ Before: "Write an email about the quarterly report."

✅ After: "Write an email about our Q1 2024 marketing report. I'm the marketing director sending this to our CEO who is concerned about our declining social media engagement. The report shows we've actually increased conversions despite lower engagement numbers."

🧠 Why it works: By providing context about your role, the recipient, their concerns, and the key insight from the report, you're giving the AI the background information it needs to create a relevant, targeted email rather than generic fluff.

2. Clarity

❌ Before: "Help me with a presentation."

✅ After: "Create an outline for a 10-slide presentation introducing our new customer service chatbot to our sales team. Include specific slides for: the problem it solves, key features, implementation timeline, and how it will affect sales workflows."

🧠 Why it works: The specific request defines exactly what you want (an outline, not the full slides), the exact topic, audience, length, and the required content sections.

3. Constraints

❌ Before: "Create a new mobile app logo."

✅ After: "Create a design brief for our new mobile app logo. Our brand colors are teal (#008080) and coral (#FF7F50). The app is a meditation timer for busy professionals. The logo should be minimalist, work at small sizes, and avoid clichéd wellness symbols like lotus flowers and Buddha statues."

🧠 Why it works: The constraints tell the AI which directions to pursue (brand colors, minimalist, legible at small sizes) and which to avoid (clichéd wellness symbols), resulting in more precise outputs that align with what you actually want.

4. Character

❌ Before: "Create a cold outreach message."

✅ After: "Create a cold outreach message to HR directors at enterprise companies. Write in the style of a curious consultant rather than a pushy salesperson. Use a conversational tone that respects their expertise and asks thoughtful questions about their current challenges before mentioning our solution."

🧠 Why it works: Character turns generic corporate content into something that feels authentic and human. It gives the AI a "voice" to write in rather than defaulting to bland corporate-speak.

5. Criteria

❌ Before: "Summarize this research paper on remote work productivity."

✅ After: "Summarize this research paper on remote work productivity. Focus on methodology and key findings. A good summary will explain the study design, highlight statistical significance of results, address limitations, and avoid overstating conclusions. Include 1-2 surprising insights that challenge common assumptions about remote work."

🧠 Why it works: By defining what makes a "good" summary, you're teaching the AI how to evaluate and improve its own output, leading to more nuanced, balanced, and insightful content.
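If you reuse this framework often, it can help to treat the five parts as fields you fill in rather than a paragraph you retype. Here's a minimal sketch of that idea in Python. The `PromptSpec` class and `build_prompt` function are names I made up for illustration, the labels are just one possible wording, and the constraint and tone values are placeholders rather than recommendations.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the five parts of the framework."""
    context: str      # who you are, who it's for, why it matters
    clarity: str      # the specific task
    constraints: str  # boundaries and requirements
    character: str    # tone, style, perspective
    criteria: str     # what a good answer looks like


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty parts into one labeled prompt, in framework order."""
    parts = [
        ("Context", spec.context),
        ("Task", spec.clarity),
        ("Constraints", spec.constraints),
        ("Tone and style", spec.character),
        ("A good answer will", spec.criteria),
    ]
    return "\n".join(
        f"{label}: {text.strip()}" for label, text in parts if text.strip()
    )


# Values loosely based on the quarterly-report email example above;
# the constraint and tone lines are illustrative placeholders.
spec = PromptSpec(
    context=(
        "I'm the marketing director writing to our CEO, who is concerned "
        "about declining social media engagement."
    ),
    clarity="Write an email about our Q1 2024 marketing report.",
    constraints="Keep it under 200 words.",
    character="Conversational but professional.",
    criteria="lead with the fact that conversions increased despite lower engagement.",
)
prompt = build_prompt(spec)
print(prompt)
```

Any part you leave empty is skipped, so the same helper works for quick prompts that only need context and a task.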

And that's the secret sauce to better AI prompts.

But here's where things get really interesting. Once you've got those basics down, there's a whole other level to unlock.

The real magic happens when you stop treating AI like a fancy search engine and start treating it like a teammate. I'm not talking about extracting stuff from AI—I'm talking about actually working with it.

Most people get this totally wrong. They throw demands at AI like they're ordering at a drive-thru, then wonder why the results feel generic or off-target. But flip that approach on its head, and suddenly everything changes.

How to work with AI to 10x your output quality

Working with AI isn't about barking orders—it's about collaboration. We need to ditch this whole "extract value from AI" mindset and start thinking partnership.

Think about your best work experiences. When does a colleague actually help you do better work? When they understand what you're trying to accomplish, right? Not just what you're asking them to do.

Same goes for AI. When you help it understand your actual thinking process, not just your request, the quality jumps dramatically.

I've found two approaches that completely change how you work with AI and the quality of results you get:

1. Few-shot prompting

Instead of just describing what you want, show the AI examples of your desired output. This is like training a new employee by showing them completed examples of the work.

❌ Before: "Suggest email subject lines for our webinar invitation."

✅ After: "Here are examples of email subject lines that achieved 45%+ open rates for webinar invitations.

Generate 5 subject lines for our upcoming webinar on 'Productivity Essentials for Remote Teams' following similar patterns.

Example high-performing subject lines:

3 Customer Retention Strategies You Haven't Tried | Seats Limited

Join us Tuesday: How to cut reporting time in half (+ live Q&A)

Quick question about your marketing automation, [Name]

These conversion mistakes cost us $23K (learn from our fails)

Last chance: Tomorrow's analytics masterclass (50 spots left)"

🧠 Why it works: By providing examples with proven open rates, you're giving the AI patterns that actually work in the real world. The examples show specific techniques (scarcity, time references, personalization, specific benefits, curiosity gaps) that the AI can adapt to your topic.
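The same pattern is easy to assemble programmatically if you keep your best-performing examples in a list. This is only a sketch: `build_few_shot_prompt` is a hypothetical helper, and the layout (framing sentence, bulleted examples, then the request) is one reasonable arrangement, not the only one that works.

```python
def build_few_shot_prompt(intro: str, examples: list[str], request: str) -> str:
    """Place the examples between the framing sentence and the actual request."""
    if not examples:
        raise ValueError("few-shot prompting needs at least one example")
    example_block = "\n".join(f"- {example}" for example in examples)
    return f"{intro}\n\n{example_block}\n\n{request}"


# The subject lines from the example above.
examples = [
    "3 Customer Retention Strategies You Haven't Tried | Seats Limited",
    "Join us Tuesday: How to cut reporting time in half (+ live Q&A)",
    "Quick question about your marketing automation, [Name]",
    "These conversion mistakes cost us $23K (learn from our fails)",
    "Last chance: Tomorrow's analytics masterclass (50 spots left)",
]

few_shot_prompt = build_few_shot_prompt(
    intro=(
        "Here are examples of email subject lines that achieved 45%+ open "
        "rates for webinar invitations:"
    ),
    examples=examples,
    request=(
        "Generate 5 subject lines for our upcoming webinar on "
        "'Productivity Essentials for Remote Teams' following similar patterns."
    ),
)
print(few_shot_prompt)
```

Swapping in a different example list lets you reuse the same structure for headlines, product descriptions, or anything else where you already have samples you like.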

2. Chain-of-thought prompting

I stumbled onto this next technique while nerding out on an Anthropic podcast (I have this AI tool that pulls key insights from podcasts, a game changer for someone who wants to save more time while learning new things).

Here's what hit me: when you guide AI through a thinking process instead of just asking for an answer, everything changes. The Anthropic folks call this "chain-of-thought prompting."

It's basically teaching AI to show its work. Instead of saying "what's 28 x 15?" you say "let's multiply step by step." The magic happens because you're mimicking how humans actually think—breaking complex problems into logical steps.

No fancy philosophy needed here. This is purely practical: when AI thinks through problems step-by-step like we do, you get answers that you actually want.

Here are some effective ways you can do it:

Step-by-step reasoning: "Let's approach this problem step-by-step: 1) First, we'll... 2) Next, we'll... 3) Finally, we'll..."

Pros and cons analysis: "Let's consider the pros and cons of this situation: Pros: 1)... 2)... 3)... Cons: 1)... 2)... 3)... Based on these, we can conclude..."

If-then scenarios: "If we assume X, then Y would likely happen because... However, if we assume Z instead, then..."

Analogical reasoning: "This situation is similar to [analogy]. In that case, we would... Therefore, in our current scenario..."

Reverse engineering: "To achieve [goal], we need to work backwards. The final step would be... Before that, we would need to... And to start, we should..."

To help you understand more, let's take an example:

❌ Before: "Is it more cost-effective to subscribe to the premium plan or pay per use for our team of 15 people?"

✅ After: "I need to determine whether the premium plan or pay-per-use model is more cost-effective for our team of 15 people. Think through this step by step:

First, calculate the monthly cost of the premium plan for 15 people

Next, estimate our typical usage based on these patterns: [usage details]

Calculate the pay-per-use cost based on this estimated usage

Compare the two options and identify any non-cost factors to consider

Make a recommendation based on the analysis"

🧠 Why it works: This technique forces the AI to slow down and work through the problem logically, rather than making assumptions. It also makes the reasoning transparent, allowing you to spot any errors in the AI's thinking.
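If you find yourself writing these step lists by hand a lot, a tiny helper can number them for you. The sketch below uses a hypothetical `build_step_by_step_prompt` function; the steps are the ones from the example above, and the `[usage details]` placeholder is left as-is for you to fill in with your own numbers.

```python
def build_step_by_step_prompt(goal: str, steps: list[str]) -> str:
    """State the goal, then list the reasoning steps as a numbered sequence."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"{goal} Think through this step by step:\n{numbered}"


cot_prompt = build_step_by_step_prompt(
    goal=(
        "I need to determine whether the premium plan or pay-per-use model "
        "is more cost-effective for our team of 15 people."
    ),
    steps=[
        "First, calculate the monthly cost of the premium plan for 15 people",
        "Next, estimate our typical usage based on these patterns: [usage details]",
        "Calculate the pay-per-use cost based on this estimated usage",
        "Compare the two options and identify any non-cost factors to consider",
        "Make a recommendation based on the analysis",
    ],
)
print(cot_prompt)
```

Writing the steps as a list first is also a decent check on your own thinking: if you can't name the steps, the AI probably can't guess them either.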

Here's what makes chain-of-thought so powerful: it completely changes your relationship with AI.

Think about it like this: When a junior colleague hands in disappointing work, is it because they're stupid? No—it's usually because nobody taught them how to approach the problem. That's on you as the leader, not them.

Same deal with AI. Garbage thinking in, garbage results out. The quality of AI output directly reflects how clearly you can structure your own thoughts. When you take the time to walk AI through your reasoning process, you're forcing yourself to think better too.

This is exactly why most people don't get good results from AI. The hard truth? Thoughtful prompting takes actual effort. It's easier to throw a vague question at ChatGPT, get a mediocre answer, and then complain that "AI isn't that useful."

Don't be that person whining about AI limitations when you haven't put in the work. Be the one who thinks alongside AI, refines your approach, and gets results that make everyone else wonder what secret prompt you're using.

What most people get wrong about prompt engineering

Let's get real about prompt engineering: it's a skill, not just a hack. And like any skill worth having, you'll suck at it before you get good at it.

The problem? Most people try a couple of prompts, get mediocre results, and decide "this AI stuff is overhyped." Then they go back to doing things the slow way.

I've watched hundreds of people go through this cycle. They make the same predictable mistakes, get frustrated, and quit right before the breakthroughs happen.

Here are the five prompt engineering mistakes I see constantly—and exactly how to fix them so you don't waste time hitting the same walls everyone else does:

1. Being too vague or too specific

❌ The Mistake: Either providing too little guidance ("Write me something about marketing") or micromanaging every detail ("Write exactly 437 words about digital marketing with exactly 7 bullet points and use the word 'strategy' exactly 12 times").

✅ The Fix: Aim for clarity on your goals and key requirements while leaving room for the AI to apply its capabilities. Specify the important elements (audience, purpose, format) but allow flexibility on less critical details.

2. Ignoring the iteration process

❌ The Mistake: Expecting perfect results on the first try and giving up when the AI doesn't deliver what you expected.

✅ The Fix: Treat prompt engineering as a conversation, not a one-shot request. Use the AI's initial output to refine your prompt, making incremental improvements with each round.
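For anyone scripting this, iteration just means keeping the whole exchange and adding feedback to it instead of starting over. Here's a rough sketch under a few assumptions: the role/content message shape is a common chat convention rather than any specific vendor's API, `refine` is a name I made up, and `echo_model` is a stand-in that only labels drafts so the example runs without a real model.

```python
from typing import Callable

Message = dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def refine(
    ask: Callable[[list[Message]], str],
    first_prompt: str,
    feedback_rounds: list[str],
) -> list[Message]:
    """Send a prompt, then feed each piece of feedback back into the same conversation."""
    history: list[Message] = [{"role": "user", "content": first_prompt}]
    history.append({"role": "assistant", "content": ask(history)})
    for feedback in feedback_rounds:
        history.append({"role": "user", "content": feedback})
        history.append({"role": "assistant", "content": ask(history)})
    return history


def echo_model(history: list[Message]) -> str:
    """Stand-in for a real model call: labels each draft by the number of user turns."""
    user_turns = sum(1 for message in history if message["role"] == "user")
    return f"draft {user_turns}"


history = refine(
    echo_model,
    "Write an email about our Q1 2024 marketing report.",
    ["Shorten it to three paragraphs.", "Open with the conversion numbers."],
)
for message in history:
    print(f"{message['role']}: {message['content']}")
```

The point of the structure is that each round of feedback builds on the previous draft, which is what separates a conversation from a series of unrelated one-shot requests.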

3. Not reviewing AI outputs critically

❌ The Mistake: Blindly trusting everything the AI produces without verification.

✅ The Fix: Always fact-check important information, especially statistics, dates, and specific claims. Remember that AI can hallucinate, confidently delivering false information.

4. Overlooking negative instructions

❌ The Mistake: Focusing only on what you want and forgetting to specify what you don't want.

✅ The Fix: Include clear instructions about approaches, styles, or content to avoid.

5. Forgetting context for specialized knowledge

❌ The Mistake: Assuming the AI knows your industry jargon, company-specific information, or specialized concepts.

✅ The Fix: Briefly explain or define specialized terminology and provide context for domain-specific tasks.

Where is all this prompt engineering stuff headed?

As AI systems evolve, so will the way we work with them. Each new AI model gets better at understanding what you actually want without all the tricks. But here's what won't change: clear communication will always matter. The people who can articulate exactly what they want—whether to humans or AI—will always have an advantage.

Here's what I'm seeing:

1. From tricks to conversations

Remember when people shared those weirdly specific "magic prompts" that were supposedly perfect? That era is ending. With models improving so quickly, most of those hacks are already unnecessary.

The future isn't about memorizing prompt templates - it's about having meaningful exchanges with AI. The fundamentals of clear communication will always matter, regardless of how smart these systems get.

2. Tools that do the heavy lifting

We're already seeing specialized AI tools that handle prompt engineering behind the scenes - AI coding assistants, writing tools, research agents that are customized for specific tasks.

These tools let you interact through purpose-built interfaces rather than crafting raw prompts every time. But here's the advantage you'll have: understanding what's happening "under the hood" will let you push these tools further than people who just accept whatever the default settings produce.

3. The human element remains crucial

Despite what the hype suggests, we're not headed toward a future where AI does everything while humans sit back. The most successful people will be those who develop effective collaboration patterns with AI.

Think of it like the shift from command-line interfaces to graphical user interfaces - the interaction becomes more intuitive, but knowing the underlying principles still gives you an edge.

Building your prompt engineering muscles now means you'll adapt more quickly to whatever comes next. The mental models you're developing around how to direct AI will transfer to new interaction patterns, even as the specific techniques change.

The bottom line: focus on the principles we've covered rather than chasing every new prompt hack. Clear communication, structured thinking, and collaborative problem-solving will remain valuable no matter how the technology evolves.

🧠 AI Rabbithole

Here's what I found this week on the internet:

OpenAI just launched their own AI Academy - become the AI expert your team desperately needs in just two hours.

Chat is dead? Cove lets you interact with AI visually, not just through text.

Shopify CEO's controversial take: 'If an AI agent can do the job, don't bother hiring.' See the full statement that has everyone talking.

The AI coding tools war just heated up: Google's Firebase Studio enters the ring against Replit, Cursor and others. Learn why they're betting big on Gemini as their secret weapon.

A Pew Research Center study revealed: While 76% of experts believe AI will benefit them personally, only 24% of the public shares this optimism. This gap is wild! And this is exactly why I started this newsletter.
