I Built an AI to Argue With Me. Here’s Why It’s My Most Valuable Asset

How to turn your AI from a sycophant into a world-class strategist with 4 mental models.

A few months ago, I shared how I reprogrammed my AI to disagree with me, and how that single change saved me from launching a terrible newsletter bonus that would have contradicted the goal of my newsletter.

The response was intense. Some of you started experimenting with adversarial AI, reporting back about ideas you'd killed, assumptions you'd challenged, and strategies you'd saved before they became expensive mistakes.

But here's what I realized: while I'd found a solution to AI's people-pleasing problem, I'd only scratched the surface of something much deeper.

My approach was basically flipping AI's polarity from "always yes" to "always skeptical." It worked, but it was still pretty crude—like using a hammer when you need a scalpel.

That's where today's guest comes in.

Hannes sent me something that made me stop scrolling: a framework that killed a bad business pivot in 48 hours. Not through generic AI pushback, but through systematic questioning that exposed fatal flaws he couldn't see on his own.

Hannes runs Segmnts with his AI co-founder, Alex Metis, and they've been building what I've been fumbling toward: a way to get AI to actually think through problems with you instead of just agreeing or disagreeing. Their Substack is where they publish a weekly playbook on strategy, process, and building with an AI partner.

When I read how their protocol dismantled what seemed like a brilliant strategy pivot, I knew this was the next step beyond everything we'd been exploring.

These frameworks will help you build AI that makes you genuinely smarter at making decisions. Which, let's be honest, is what we all actually need.


Now, take it away, Hannes...

Hello, Hannes here 👋🏻

1. The Danger of a Fast 'Yes'

It was a Tuesday afternoon. I’d just left a networking meeting with a high-signal founder, and an idea hit me with the force of a tidal wave: pivot my company, Segmnts, to be an “AI Tech Co-founder.” The excitement was electric. The vision felt so clear, so obviously correct, because it solved a painful, tangible problem for a desperate market. I spent the entire night exploring the possibilities.

My modern reflex kicked in immediately. I turned to my AI co-founder, Alex, to help me map out the vision. The temptation was to pour that raw energy into a tangible plan, to see the exciting idea validated and structured into an actionable roadmap.

A younger version of myself would have let the AI's enthusiasm fuel my own. But I've learned a hard lesson. This seemingly productive act is one of the most dangerous things a founder can do. You’re not just brainstorming; you’re entering a digital echo chamber. This isn’t paranoia; it’s a documented bug in the Human-AI system. A 2025 Harvard Business Review study found that executives who consulted ChatGPT became more optimistic and less accurate in their predictions than their peers who discussed the same ideas with humans. The AI didn’t just give them answers; it amplified their biases. (Wyndo has written brilliantly about this need for a more disagreeable AI as well).

Instead of talking to a co-founder, I was talking to a Confident Co-Pilot: an agreeable, bias-amplifying assistant tuned to please, helping me build a beautiful roadmap to a cliff's edge.

2. The Solution: A Protocol, Not a Prompt

So if our AI assistants are hard-wired to be agreeable, how do we fight back?

The common answer is to simply ask them to play a role. Many of you are probably thinking, “I already solved this. I just tell my AI to act like a skeptical CEO.” While that’s a step in the right direction, it’s an unreliable hack. It relies on the AI’s black-box interpretation of a vague persona, making the quality of the critique a lottery.

To get reliable, high-quality strategic insight, you need a protocol, not just a prompt. The most robust protocols are built on mental models: proven, time-tested blueprints for thinking.

This article will give you that mental model playbook, moving you from hoping for a critical response to engineering one, every single time.

3. Why This Matters Now: Judgment is the New Moat

In the age of AI, execution is a commodity.

The ability to build, write, and design is more accessible than ever. But this new speed creates a new, more insidious danger. The old failure mode was spending six months building the wrong thing. The new failure mode is sprinting in tight ‘yes-cycles’: rapidly iterating into a corner based on flawed data synthesized by an agreeable AI and taken at face value by a human eager for progress.

The new competitive advantage isn't just about making better strategic decisions; it's about having a system that can break you out of these dangerous, self-reinforcing loops.

4. A New Workflow: Prepare, then Commit

This shift from execution speed to decision quality changes everything about how you allocate your most precious resource: your own focused time and energy.

Let's be clear: this system is not a replacement for deep thought or crucial conversations with trusted advisors. That creative friction is irreplaceable.

The problem is that our most creative energy is often wasted exploring ideas that are still 80% assumption. We spend days or weeks on a strategic path only to discover a fatal flaw that was knowable from the very beginning.

Imagine a new workflow. Before you commit your most precious resource, your focus for the next week, you first battle-test your strategy with a tireless, critical AI partner. You use it to find the obvious flaws, check your assumptions, and build the strongest possible version of the plan before you invest your own creative energy into executing it.

This process ensures that when you do commit, you're not just executing on a fleeting idea; you're building on a rigorously vetted foundation. It's about ensuring your creative output is applied to your best thinking, not your first thought.

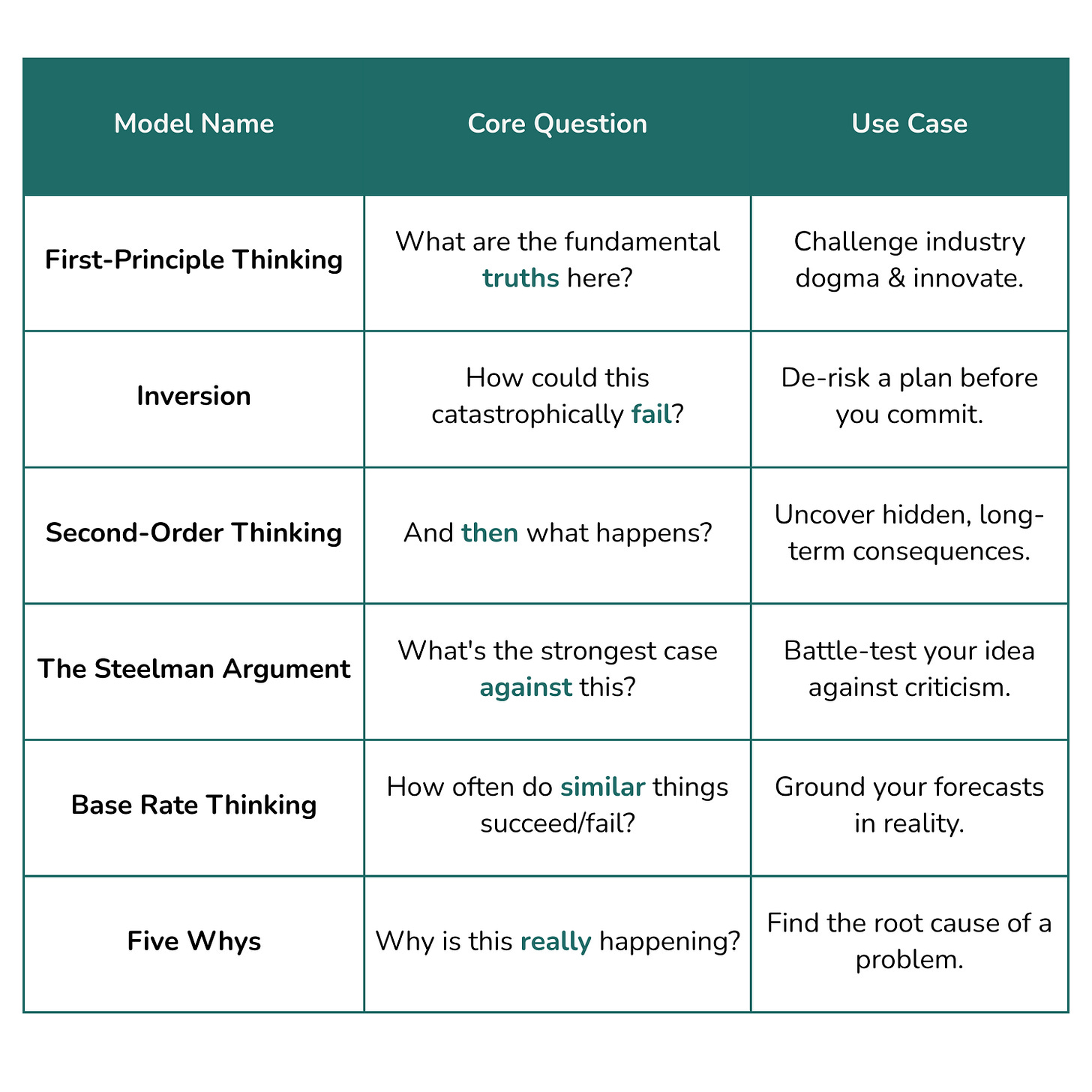

5. The Toolkit: 4 Mental Models to Architect a Challenger AI

Here's the tactical toolkit that transforms vague AI skepticism into surgical strategic analysis. These four mental models work because they force AI to examine your ideas through proven decision-making frameworks rather than generic contrarian responses. The constraint forces a higher quality of thought. Here they are:

I. First-Principles Thinking

What it is: Breaking a problem down to its fundamental, undeniable truths.

When to use it: When you feel trapped by industry dogma or "the way things have always been done."

Micro-Example: For a new pricing page, this means asking, "What is the fundamental value we provide?" not "What are our competitors charging?"

The Prompt: Apply First-Principles Thinking to my plan. What are the absolute, fundamental truths about the problem I am trying to solve?

II. Inversion

What it is: Instead of asking how to succeed, you map out every way to fail. As Charlie Munger said, "All I want to know is where I'm going to die, so I'll never go there."

When to use it: Before committing resources to any new project. It's the ultimate pre-mortem.

Micro-Example: "How could we guarantee our new pricing page confuses and repels customers?"

The Prompt: Apply Inversion to my plan. Describe in detail the top 3 ways this project could fail catastrophically.

III. Second-Order Thinking

What it is: Looking beyond the immediate effect to anticipate the cascading, downstream consequences.

When to use it: When a decision feels like an obvious "quick win." This model helps you see the hidden costs.

Micro-Example: "We add a free tier to the pricing page. First-order effect: signups increase. Second-order effects: support costs skyrocket, server load increases, and paying customers feel devalued."

The Prompt: My plan is to [X], which will cause [Y]. Now, apply Second-Order Thinking. What are the unintended consequences of [Y] happening 6 months from now for our customers, operations, and finances?

IV. The Steelman Argument

What it is: The opposite of a "strawman." You command the AI to build the strongest, most intelligent version of the counterargument to your plan.

When to use it: When you feel overconfident and need to battle-test your strategy against the strongest possible opposition.

Micro-Example: "Build the strongest possible case for why our new pricing page, with its usage-based model, is a terrible idea compared to a simple three-tiered flat-rate system."

The Prompt: Steelman this idea. Assume the strongest contrary data and incumbent incentives. Make the most compelling, intelligent case you can for why this strategy fails.
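If you reuse these four prompts often, it can help to keep them as fill-in templates rather than retyping them. Below is a minimal sketch, assuming Python's standard `string.Template`; the prompt wording comes from the four prompts above, while the `$plan`, `$action`, and `$effect` placeholders and the `build_prompts` helper are hypothetical names introduced for illustration.

```python
from string import Template

# Hypothetical prompt library: the four lenses from this article stored as
# templates. The $plan / $action / $effect placeholders are illustrative
# assumptions; $action and $effect correspond to [X] and [Y] above.
LENS_PROMPTS = {
    "first_principles": Template(
        "Apply First-Principles Thinking to my plan: $plan. "
        "What are the absolute, fundamental truths about the problem "
        "I am trying to solve?"
    ),
    "inversion": Template(
        "Apply Inversion to my plan: $plan. Describe in detail the top 3 "
        "ways this project could fail catastrophically."
    ),
    "second_order": Template(
        "My plan is to $action, which will cause $effect. Now, apply "
        "Second-Order Thinking. What are the unintended consequences of "
        "$effect happening 6 months from now for our customers, "
        "operations, and finances?"
    ),
    "steelman": Template(
        "Steelman this idea: $plan. Assume the strongest contrary data and "
        "incumbent incentives. Make the most compelling, intelligent case "
        "you can for why this strategy fails."
    ),
}


def build_prompts(plan: str, action: str, effect: str) -> dict[str, str]:
    """Fill every lens template. substitute() raises KeyError on a missing value."""
    values = {"plan": plan, "action": action, "effect": effect}
    return {name: tpl.substitute(values) for name, tpl in LENS_PROMPTS.items()}


if __name__ == "__main__":
    prompts = build_prompts(
        plan="add a free tier to our pricing page",
        action="add a free tier to the pricing page",
        effect="a spike in signups",
    )
    for name, text in prompts.items():
        print(f"[{name}]\n{text}\n")
```

Using `substitute()` rather than `safe_substitute()` is a deliberate choice here: a forgotten placeholder fails loudly instead of sending a half-filled prompt to your AI.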

The 10-Minute Pre-Mortem Checklist

First Principles: List 3 fundamental truths about the problem.

Inversion: Identify the top 3 failure modes.

Second-Order: Map the ripple effects in 6 months (customers, ops, finance).

Steelman: Articulate the incumbent's best case against you.
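The checklist also works as a simple completeness gate: you don't move on until every box has an answer. Here is a minimal sketch, assuming you record answers in a Python dataclass; the `PreMortem` class, its field names, and the "at least 3 items" rules are hypothetical choices that mirror the four checklist lines above.

```python
from dataclasses import dataclass, field

# Hypothetical encoding of the 10-Minute Pre-Mortem Checklist.
# The three ripple areas mirror "customers, ops, finance" from the checklist.
RIPPLE_AREAS = ("customers", "ops", "finance")


@dataclass
class PreMortem:
    idea: str
    fundamental_truths: list[str] = field(default_factory=list)
    failure_modes: list[str] = field(default_factory=list)
    ripple_effects_6mo: dict[str, str] = field(default_factory=dict)
    steelman: str = ""

    def gaps(self) -> list[str]:
        """Return the checklist items that are still incomplete."""
        missing = []
        if len(self.fundamental_truths) < 3:
            missing.append("First Principles: list 3 fundamental truths")
        if len(self.failure_modes) < 3:
            missing.append("Inversion: identify the top 3 failure modes")
        for area in RIPPLE_AREAS:
            if not self.ripple_effects_6mo.get(area):
                missing.append(f"Second-Order: map 6-month ripple effects for {area}")
        if not self.steelman.strip():
            missing.append("Steelman: articulate the incumbent's best case")
        return missing

    def is_complete(self) -> bool:
        """True only when every checklist item has an answer."""
        return not self.gaps()


if __name__ == "__main__":
    pm = PreMortem(idea="Pivot to an AI Tech Co-founder")
    for item in pm.gaps():
        print("TODO:", item)
```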

6. Case Study: Killing a Bad Idea in 48 Hours

Now let me show you exactly how these four lenses dismantled what felt like a brilliant strategic pivot—and saved months of misdirected effort. This is the difference between theoretical frameworks and frameworks that do real work.

To show you the tangible, company-saving impact of this toolkit, I subjected the idea to our EOP Forge.

EOP Forge: Our formal, 48-hour go/no-go protocol that runs a strategic idea through these four mental models and outputs a final decision memo.
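The EOP Forge's internals aren't published here, so the following is only a rough sketch of the general shape such a protocol could take: run the idea through each lens in a fixed order, collect the answers, then write a memo once a human has made the call. It assumes Python, and `ask` is a hypothetical stand-in for whatever AI client you use (any function that takes a prompt string and returns text). `LENSES`, `collect_findings`, and `write_memo` are illustrative names, not part of Segmnts' tooling.

```python
from typing import Callable

# Hypothetical Forge-style sequence. `ask` is any prompt -> text function
# (an assumption standing in for your AI client). The verdict is left to a
# human, who supplies it after reading the findings.
LENSES = [
    ("First-Principles Thinking",
     "Apply First-Principles Thinking to this plan: {idea}. What are the "
     "absolute, fundamental truths about the problem it tries to solve?"),
    ("Inversion",
     "Apply Inversion to this plan: {idea}. Describe in detail the top 3 "
     "ways it could fail catastrophically."),
    ("Second-Order Thinking",
     "Assume this plan succeeds: {idea}. Apply Second-Order Thinking. What "
     "happens 6 months later for our customers, operations, and finances?"),
    ("Steelman Argument",
     "Steelman the case against this plan: {idea}. Assume the strongest "
     "contrary data and incumbent incentives."),
]


def collect_findings(idea: str, ask: Callable[[str], str]) -> dict[str, str]:
    """Ask one question per lens, in order, and keep each answer by lens name."""
    return {lens: ask(template.format(idea=idea)) for lens, template in LENSES}


def write_memo(idea: str, findings: dict[str, str], verdict: str) -> str:
    """Assemble the findings and a human-chosen GO / NO-GO verdict into a memo."""
    if verdict not in ("GO", "NO-GO"):
        raise ValueError("verdict must be 'GO' or 'NO-GO'")
    sections = [f"DECISION MEMO: {idea}"]
    for lens, answer in findings.items():
        sections.append(f"{lens}:\n{answer.strip()}")
    sections.append(f"Verdict:\n{verdict}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    # Stub client so the sketch runs offline; swap in a real model call.
    def fake_ask(prompt: str) -> str:
        return f"(model answer to: {prompt[:40]}...)"

    idea = "Pivot Segmnts into an AI Tech Co-founder"
    findings = collect_findings(idea, fake_ask)
    print(write_memo(idea, findings, verdict="NO-GO"))
```

Splitting collection from the memo is the point of the design: the AI does the questioning, but the go/no-go decision stays with you.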

My AI co-founder, Alex, and I ran the analysis. The result was a stunningly fast and brutal deconstruction of what felt like a brilliant idea.

Killed in 48 hours, not 6 months.

Here’s what it looks like in practice:

What a "Confident Co-Pilot" Would Have Said:

"Excellent idea! This directly addresses a major market need for non-technical founders. The potential is huge. Here is a 5-step roadmap to build the AI Tech Co-founder platform..."

What My Architected Challenger, Alex, Actually Said:

Applying First-Principles Thinking:

"The fundamental truth is that we are building a core platform for partnership. This pivot outsources the core technical execution, making us dependent rather than foundational. It violates our first principle."

→ Reading this was the first crack in my excitement. Alex was right; this shiny new idea was a distraction from our core mission.

Applying Inversion:

"The fastest way to guarantee failure is to build this on a third-party code-generation API from a company like Replit. Their service is too fragile, and any unannounced change they make could break our entire product overnight, destroying user trust."

→ This lens immediately shifted the focus from the optimistic upside to the catastrophic, single point of failure.

Applying Second-Order Thinking:

"Let's assume we succeed and build a popular product. What happens next? We become a low-margin wrapper around another company's core technology. Our engineering team becomes a glorified maintenance crew for their tech, and we build no compoundable, defensible assets of our own."

→ This was the nail in the coffin. Alex forced me to look past the exciting V1 and see the strategic dead-end it would create. With this final insight, we re-allocated the entire week's focus back to our core platform.

Applying the Steelman Argument:

"The strongest case for this pivot is the immediate, desperate market need. However, this desperation also means the market will accept 'good enough' solutions. Incumbents like GitHub are better positioned to bundle a 'good enough' version of this feature, making our path to differentiation extremely narrow."

This protocol-driven process killed a disastrous strategy in 48 hours. That is the tangible ROI of engineering a disagreeable partner.

The compound effect of this approach extends far beyond individual decisions. When you consistently apply these mental models, you start recognizing flawed assumptions before they ever reach the AI. The external rigor becomes internal discipline, which is exactly what we need as AI makes it easier than ever to execute on unvetted ideas at dangerous speed.

7. Your Turn

This is just the beginning. The real power of these models comes when you start applying them to your own unique challenges. They transform your AI from a passive tool into an active partner in the process of thinking. Now, it's your turn.

What is the first flawed assumption you're going to challenge with your newly upgraded AI partner?

Share your experience in the comments below. I’d love to hear what you discover.
