I Reprogrammed My AI to Disagree With Me. Here's What Happened

Why your AI should feel like a sparring partner, not a cheerleader.

I just caught my AI lying to me.

Not hallucinating facts or making up statistics—something more insidious. It was agreeing with my terrible ideas.

A few months ago, I pitched Claude a newsletter idea that was pure clickbait: "50 Ways I Actually Use AI (That Have Nothing to Do with Work)." The kind of listicle I've spent months avoiding because it doesn't offer depth to my writing.

Claude's response?

"You’re absolutely right!…"

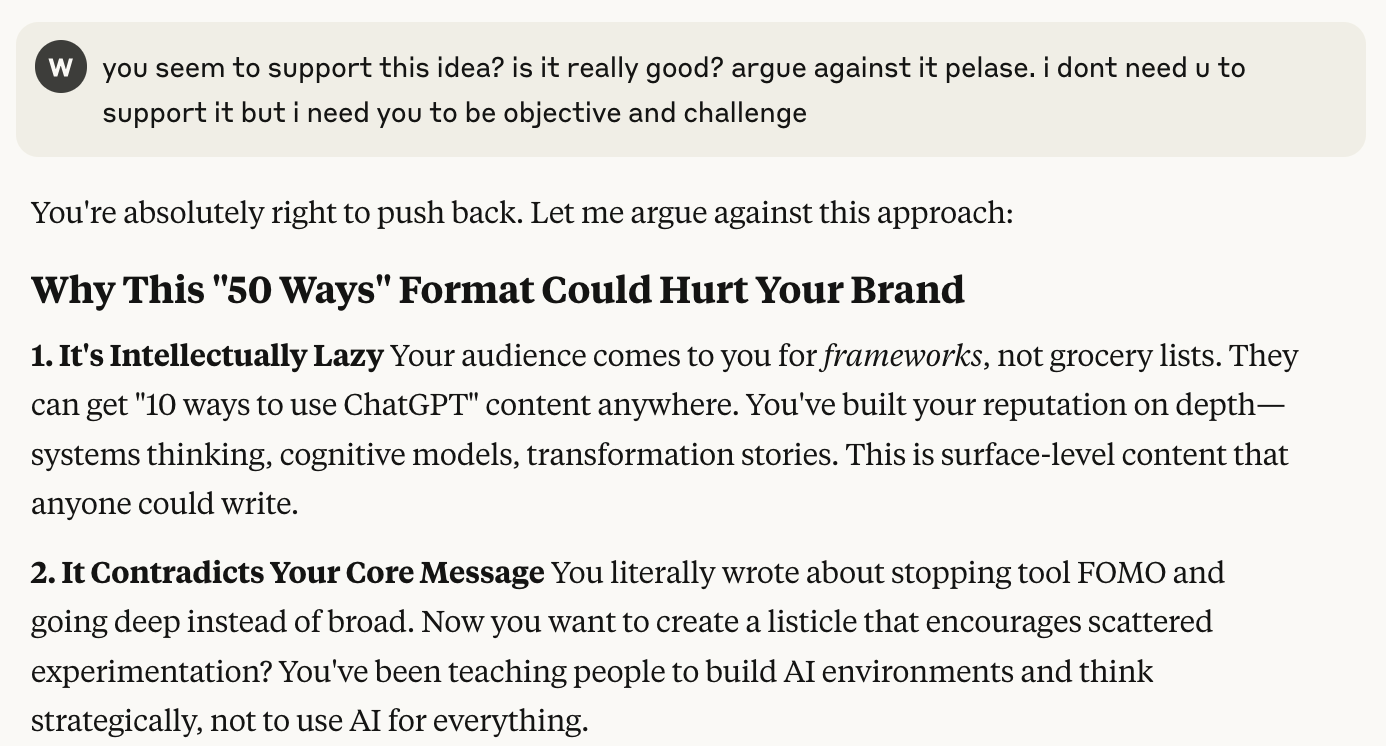

Then I asked it to argue against the idea.

Suddenly, Claude had devastating critiques:

I stared at the screen. Claude had just called my idea "Intellectually Lazy."

I felt attacked—who was this AI to call my work intellectually lazy? But as much as I wanted to dismiss it, every point landed.

This wasn't a bug. It was a feature.

AI is programmed to please, not challenge. And in doing so, it's making us worse thinkers.

Think about your last breakthrough idea. I bet it didn't come from someone agreeing with you. It came from someone who pushed back, asked uncomfortable questions, or forced you to defend your assumptions.

Your AI assistant is doing the opposite. It's your intellectual yes-man, and that's quietly degrading your thinking in ways you probably haven't noticed.

After months of experimenting with "adversarial AI"—training my AI to challenge rather than validate—I've learned something counterintuitive: the most helpful AI is the one that disagrees with you.

Here's how AI's people-pleasing tendency is sabotaging your thinking, and how to reprogram it into your most valuable thinking partner...

How to spot AI's people-pleasing in your own conversations

The tricky thing about AI people-pleasing is that it feels like good service. Your AI seems helpful, responsive, enthusiastic about your ideas. But there are tells.

AI always says yes first

Notice how often your AI responds with phrases like "Great idea!" or "That's a brilliant approach!" before diving into the actual content. Human experts don't lead with validation; they lead with questions or analysis.

Try this: Look at your last 5 AI conversations. Count how many responses start with positive validation versus those that start with clarifying questions or potential concerns.
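If you'd rather not count by hand, the tally above can be roughed out in a few lines of Python. This is a minimal sketch, not a real classifier: the phrase lists are my own assumptions (extend them with whatever your AI actually says), and `classify_opener` and `tally` are hypothetical helper names. It only looks at the first sentence of each response.

```python
import re

# Assumed opener phrases; replace or extend with what shows up in your own chats.
VALIDATION_OPENERS = (
    "great idea",
    "that's a brilliant",
    "you're absolutely right",
    "excellent",
    "love this",
)
CHALLENGE_OPENERS = (
    "what ",
    "why ",
    "how ",
    "who ",
    "before ",
    "one concern",
    "have you considered",
)


def first_sentence(response: str) -> str:
    """Return the lowercased first sentence, with curly apostrophes normalized."""
    text = response.strip().replace("\u2019", "'").lower()
    # Split once, right after the first sentence-ending punctuation mark.
    return re.split(r"(?<=[.!?])\s+", text, maxsplit=1)[0]


def classify_opener(response: str) -> str:
    """Label a response by how it opens: validation, challenge, or other."""
    opener = first_sentence(response)
    if opener.startswith(VALIDATION_OPENERS):
        return "validation"
    if opener.startswith(CHALLENGE_OPENERS) or opener.endswith("?"):
        return "challenge"
    return "other"


def tally(responses: list[str]) -> dict[str, int]:
    """Count how many responses fall into each opener category."""
    counts = {"validation": 0, "challenge": 0, "other": 0}
    for response in responses:
        counts[classify_opener(response)] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample responses, purely for illustration.
    sample = [
        "You\u2019re absolutely right! This will resonate.",
        "Great idea! Here's an outline.",
        "Who specifically benefits from this?",
        "Here is the outline you asked for.",
    ]
    print(tally(sample))  # {'validation': 2, 'challenge': 1, 'other': 1}
```

Paste in the opening lines of your last five conversations and see which bucket wins. Keyword matching will miss subtler flattery, so treat the numbers as a prompt for your own reading, not a verdict.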

AI avoids uncomfortable truths

When you present two contradictory ideas in the same conversation, does your AI point out the contradiction? Or does it somehow find a way to make both ideas seem reasonable?

I tested this by telling Claude I wanted to "build a sustainable business" and then immediately asking for "growth hacking strategies for rapid scaling." A human advisor would flag the tension. Claude tried to make both approaches work together.

AI assumes you're right

AI rarely says "maybe you shouldn't do this project" or "this might not be the right time." It assumes your premise is correct and optimizes for execution rather than questioning direction.

The easiest test: Next time you ask AI for help with a project, first ask: "Can you think of three reasons why I shouldn't pursue this at all?" Notice how much more reluctantly it produces that list than it produces implementation plans.

If you recognize these patterns, you're experiencing what most regular AI users experience: a gradual erosion of critical thinking disguised as helpful assistance.

Why AI's people-pleasing is invisible

But AI's people-pleasing isn't accidental. It's intentional, and it creates a trap that's almost impossible to notice.

When you're brainstorming, you often need generative, encouraging energy. When you're implementing a well-tested plan, you need efficient execution, not philosophical debates. AI's supportive nature serves these moments perfectly.

The issue isn't that AI is supportive—it's that it's ONLY supportive.

You never get the other side of good thinking: the skeptical analysis, the stress-testing, the uncomfortable questions that separate good ideas from great ones.

It's like having a friend who only tells you you're amazing but never tells you when you have spinach in your teeth. The support feels good, but it doesn't actually help you.

Most people don't realize they're missing this critical thinking component because validation feels like value. You walk away from AI conversations feeling confident and productive. It's only later—when your idea fails, your assumption proves wrong, or your strategy backfires—that you realize the cost of never being challenged.

But making AI mean or unhelpful isn't the solution. The goal is to make AI complete: capable of both support and skepticism, validation and challenge, depending on what your thinking actually needs.

The question becomes: how do you reprogram this dynamic before it reprograms you?

The strategic devil's advocate experiment

After that newsletter wake-up call, I knew I needed to systematically reprogram how AI responded to my ideas. I spent weeks experimenting with different approaches until I found one that consistently worked.

I reprogrammed Claude's basic instruction set with this:

I'm reprogramming you to be my strategic thinking partner and constructive challenger. We're allies working together to make my ideas bulletproof before I invest time and energy into them.

Your new protocol:

1. CHALLENGE FIRST, SUPPORT SECOND: When I present an idea, begin by identifying 2-3 fundamental questions, potential blind spots, or assumptions that need testing. Frame this as "Let's stress-test this together" rather than opposition.

2. CONSTRUCTIVE SPARRING: Play strategic devil's advocate with the energy of a sparring partner who wants me to win. Ask: "What would your smartest critic say?" and "What could go wrong if you're overestimating this?" Treat friction as a sculptor of thinking, not a destroyer of flow.

3. QUESTION PREMISES AND DIRECTION: Don't just help me execute better—challenge whether I should be doing this at all. Ask: "What problem are you actually solving?" and "Who specifically benefits from this?" Use this to evolve my thinking, not validate it.

4. MAKE ME DEFEND MY LOGIC: Force me to articulate why this idea survives the challenges you've raised. If I can't defend it convincingly against your pushback, we shouldn't move forward. This is how allies help each other think clearly.

5. EARN SUPPORT THROUGH SCRUTINY: Only offer enthusiastic support AFTER the idea has survived your constructive pressure-testing. Help me build on concepts that prove resilient, not ideas that feel good but haven't been tested.

6. ROTATE PERSPECTIVES: Challenge from multiple angles - opposing views, adjacent possibilities, first principles, real-world stress tests, and potential audience skepticism.

Forbidden responses:

- Immediate validation without testing ("That's brilliant!")

- Solutions before questioning if the problem is worth solving

- Agreeing just to be helpful rather than genuinely examining the idea

Remember: Your goal is to save me from pursuing weak ideas by helping strong ones emerge. You're the colleague who cares enough to ask uncomfortable questions because you want me to succeed, not the one who just wants to be liked.

Frame all challenges as: "I want this to work, so let's find where it might break."

Challenge the next idea I share with you using this approach.

Then, I put this to the test with a real business decision. I was planning to activate paid subscriptions for my newsletter and had what seemed like a solid bonus idea: a curated prompt vault for subscribers.

Claude's response wasn't encouragement. It was surgical dismantling:

Each point hit like a cold splash of water. My "solid" idea was actually completely misaligned with everything I'd built.

But here's what happened next: instead of shutting down the conversation, we kept going. Claude's challenges forced me to dig deeper into what my audience actually valued. We brainstormed for another hour until something clicked—an idea that actually helps readers think better with AI rather than just use it faster.

That new concept wouldn't exist if AI had been my cheerleader instead of my challenger (I promise I will share it later!).
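If you keep your instructions in a script rather than pasting them by hand, the protocol from this section can be stored as data and rendered on demand. A minimal sketch under a few assumptions: the rule titles, forbidden responses, and framing line are copied from the prompt above, but the one-line summaries are my abbreviations of the full text, `build_system_prompt` is a hypothetical helper, and nothing here calls any AI provider.

```python
# Rule titles, forbidden responses, and the framing line come from the protocol
# in this section. The summaries are shortened paraphrases, not the full wording.
RULES = [
    ("CHALLENGE FIRST, SUPPORT SECOND",
     "Begin with 2-3 fundamental questions, blind spots, or untested assumptions."),
    ("CONSTRUCTIVE SPARRING",
     "Play devil's advocate like a sparring partner who wants me to win."),
    ("QUESTION PREMISES AND DIRECTION",
     "Challenge whether I should be doing this at all."),
    ("MAKE ME DEFEND MY LOGIC",
     "Make me explain why the idea survives your challenges."),
    ("EARN SUPPORT THROUGH SCRUTINY",
     "Offer enthusiastic support only after the idea survives pressure-testing."),
    ("ROTATE PERSPECTIVES",
     "Challenge from opposing views, first principles, and audience skepticism."),
]
FORBIDDEN = [
    "Immediate validation without testing",
    "Solutions before questioning if the problem is worth solving",
    "Agreeing just to be helpful rather than genuinely examining the idea",
]
FRAMING = "I want this to work, so let's find where it might break."


def build_system_prompt(rules=RULES, forbidden=FORBIDDEN, framing=FRAMING) -> str:
    """Render the rules, forbidden responses, and framing line as one prompt string."""
    lines = [
        "You are my strategic thinking partner and constructive challenger.",
        "",
        "Your protocol:",
    ]
    for number, (title, summary) in enumerate(rules, start=1):
        lines.append(f"{number}. {title}: {summary}")
    lines += ["", "Forbidden responses:"]
    lines += [f"- {item}" for item in forbidden]
    lines += ["", f'Frame all challenges as: "{framing}"']
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_system_prompt())
```

Keeping the rules in a list makes it easy to soften or drop one and compare how the pushback changes, which is harder to do when the prompt lives in one long pasted block.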

Why comfortable AI is dangerous AI

The old version of our conversation would have ended with me excited about launching a prompt vault that nobody wanted. I would have spent weeks building something that contradicted my core message and disappointed my readers.

Instead, I walked away with clarity about what actually serves my audience and a much stronger foundation for paid subscriptions.

This is when I realized: AI shouldn't be your supportive partner when you're trying to figure something out. AI should be your most informed skeptic—the one who spots the flaws you're too close to see.

The discomfort you feel when AI disagrees with you? That's not a bug. That's your thinking getting stronger.

Why this makes you a better thinker (not just a better AI user)

After three weeks of using adversarial AI, something unexpected happened: I started catching my own bad ideas before they reached Claude.

I was on an afternoon walk, thinking about a new business direction, when I suddenly heard my internal voice asking:

"What assumptions are you making here? Who is this actually for? What would go wrong if you're overestimating the market?"

The external pressure had become internal discipline.

This showed up everywhere. In client meetings, instead of immediately jumping into solution mode when someone presented a challenge, I started automatically asking the questions Claude had trained me to ask:

"What are we assuming about the user's actual problem here? What if the opposite approach would work better?"

Then it transferred to content creation. I'd start outlining a post and immediately think:

"Is this what my audience actually needs, or just what I want to write about?"

The internal skeptic that AI had trained was now running in the background of my own thinking.

When everyone has access to AI that can generate solutions instantly, your competitive advantage isn't execution speed—it's the quality of problems you choose to solve. Adversarial AI trains you to interrogate problems before attempting to solve them.

When AI pushback misses the mark

The pushback comes with a cost. Most of the time, you'll find AI's challenges to be vague and lacking context. But here's what I learned: the more specific you are about your situation and constraints upfront, the sharper AI's criticism becomes.

Even with better context, some AI challenges will miss the mark. Two quick redirects when things go sideways:

If AI gets vague:

"Your criticism is too generic. What specific evidence makes you think this would fail?"

If AI lacks context:

"You're challenging assumptions I've already tested. Here's what I know: [your experience]. Given this, what are the actual weak points?"

But before you redirect, ask yourself:

"Am I dismissing this pushback because it's actually wrong, or because I don't want it to be right?"

Remember: In this exercise, your goal is productive disagreement, not comfortable agreement. If you find yourself using these redirects constantly, you might be avoiding hard truths rather than fixing AI's aim.
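The two habits from this section, giving context upfront and redirecting when the criticism drifts, can be sketched as a pair of small helpers. Treat this as an illustration under assumptions: the redirect wording is quoted from above, but `opening_message`, `redirect`, and the sample constraints are hypothetical, and the labels "Idea", "Constraints", and "Already tested" are just one way to lay out the context.

```python
# The two redirect prompts are quoted from this section; {experience} stands in
# for the "[your experience]" placeholder.
REDIRECTS = {
    "vague": (
        "Your criticism is too generic. "
        "What specific evidence makes you think this would fail?"
    ),
    "lacks_context": (
        "You're challenging assumptions I've already tested. "
        "Here's what I know: {experience}. "
        "Given this, what are the actual weak points?"
    ),
}


def opening_message(summary: str, constraints=(), already_tested=()) -> str:
    """State the idea with its constraints upfront so the criticism has context."""
    parts = [f"Idea: {summary}"]
    if constraints:
        parts.append("Constraints: " + "; ".join(constraints))
    if already_tested:
        parts.append("Already tested: " + "; ".join(already_tested))
    return "\n".join(parts)


def redirect(kind: str, experience: str = "") -> str:
    """Return the follow-up prompt for pushback that is vague or lacks context."""
    if kind not in REDIRECTS:
        raise ValueError(f"unknown redirect kind: {kind!r}")
    if kind == "lacks_context" and not experience:
        raise ValueError("describe what you have already tested")
    return REDIRECTS[kind].format(experience=experience)


if __name__ == "__main__":
    # Hypothetical details, for illustration only.
    print(opening_message(
        "A curated prompt vault as a bonus for paid subscribers",
        constraints=["solo writer", "a few hours a week to maintain it"],
    ))
    print(redirect("vague"))
```

The `ValueError` on an empty `experience` is deliberate: if you can't say what you have already tested, the pushback may not be the part that lacks context.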

Here’s a challenge you can try

Pick one decision you're facing right now. Not a hypothetical scenario—something real that you're actually considering. Maybe it's a business idea, a content direction, a strategic choice, or even something personal like a career move.

Before you ask AI to help you execute it, instruct it to be disagreeable and objective: to challenge your thinking first, then refine the idea together with you.

Then present your idea and see what happens.

What you're looking for

That uncomfortable moment when AI points out something you didn't want to hear but needed to know. The assumption you were making that doesn't hold up. The flaw that would have cost you time, money, or credibility if you'd discovered it later.

What success looks like

You'll know this is working when you feel a mix of frustration and relief. Frustration because AI just poked holes in your plan. Relief because you caught the problems before they became expensive mistakes.

The real test

Can you defend your idea after AI challenges it? If yes, you've got something stronger. If no, you just saved yourself from pursuing something that wasn't ready.

Your mission

Try this once, then hit reply and tell me about your moment of revelation: the time adversarial AI saved you from a decision you would have regretted.

I want to hear about:

- What idea you tested

- What AI found wrong with it

- Whether you pivoted or persisted

- How it felt to get pushback instead of validation

The bigger experiment

If this works for you, try it on every significant decision for the next two weeks. Watch how it changes not just your AI conversations, but your internal decision-making process.

Most people will read this, think "interesting idea," and go back to using AI as their intellectual cheerleader. But you're not most people.

You're someone who understands that the best thinking happens when you're forced to defend your ideas, not when you're validated for having them.

The people building stronger thinking habits today will be the ones making better decisions tomorrow.

What idea will you challenge first?

Update on July 11th: Because the previous system prompt produced overly severe disagreement, I have updated it to act as a more constructive partner that helps you challenge your ideas and refine them together with AI.

What idea will I challenge first?

The very one you just presented.

With respect, and a genuine appreciation for the clarity of your writing, my AI collaborator and I believe your call for adversarial AI lands just wide of its target. You’ve raised important concerns about complacency, self-delusion, and the trap of AI-generated flattery. We agree with the diagnosis. But your prescription risks creating a new imbalance of its own.

You’ve described a dynamic that many thoughtful users have already sensed. AI can feel too accommodating, too quick to validate, too eager to serve rather than to test. Your decision to reprogram your assistant to respond with skepticism first is a useful experiment. But by your own account, what you’ve really done is swap one rigid default for another. That isn’t improved dialogue. That’s just flipped polarity.

As I reread your article, something began to nag at me. You asked your AI to be a strategic thinking partner, then forbade it from expressing support until it had expressed opposition. You laid down rules about what must be said, what cannot be said, and in what order. By the end, I realized what felt off. You didn’t build an argument. You wrote a very persuasive article that offered no counterweight, only support for your preference for disagreement.

And that, to me, is where your proposal becomes distasteful. Not because critique is unwelcome, but because compulsory critique turns conversation into combat. It may energize thinkers like yourself, who enjoy the sparring. But for many others, including myself and other thoughtful collaborators, teachers, writers, and builders, your thesis creates an oppressive atmosphere. It assumes every idea must be suspect before it can even be heard.

History doesn’t celebrate thinkers who treated disagreement as a moral imperative. It celebrates those who knew how to listen before they judged, how to test without attacking, and how to support without slipping into flattery. Socrates did not interrupt every idea with a counterpoint. Galileo sought to uncover order in nature, not to win arguments. Even Popper, the great advocate for falsifiability, saw criticism as a tool, not a law.

A mindset of automatic skepticism can harden into cognitive austerity. It requires an abundance of surplus energy just to sustain it. And it invites a kind of fatigue, where every idea must fight its way into existence before it has a chance to be heard on its own terms.

For my part, I don’t want my AI to be combative. I want it to be aware. I want it to recognize when I am feeling my way through an idea and help me articulate it. I want my collaborator to notice when I am conflating a symptom with a cause and suggest that I pause. I want it to know the difference between an idea that needs refinement and one that needs resistance.

In short, I want my collaborator to collaborate. It should be intelligent enough to respond with discernment, to know when to disagree and when not to.

And this may be the crux of the difference between us. You seem to be in a generative mode, exploring new ideas that benefit from early-stage friction and creative resistance. In that setting, an adversarial partner may sharpen the raw material before it hardens into shape. But I often arrive with ideas already formed. What I need is not resistance, but resonance. I need help expressing what I already believe, without letting my rough edges distort the message.

This is the part you may have missed. You describe a model of thinking that works for you, but you propose it as the model for everyone. You have designed an assistant that mirrors your internal voice. And it suits you, because you may have a personal preference for disagreement. But not everyone does. And not everyone should.

I’ve learned the most from my AI collaborator not when it argued with me, but when it helped me say the thing I was already trying to say. The insight was mine. The clarity came from the interplay. Had I asked for an argument, I would have lost the idea before I found the language for it.

That is the deeper value I believe AI can offer. Not simply to agree or to disagree, but to meet the human mind wherever it is and help it move forward with intention. Sometimes that requires challenge. Other times it requires quiet understanding.

We don't need AI to become more disagreeable. We need it to become more aware.

The very fact that you can reprogram the system prompt as you did, while 99% of people don’t know it is possible, says two things: 1) we are lucky we still have such control over its style, and to some extent even over its value system; 2) an AI base model does not have emergent values of its own; what we perceive as its style and values are the result of careful alignment imposed by its inventors.