How AI Is Literally Shrinking Our Brains (And What to Do About It)

Learn to flip AI's default script and unlock Socratic thinking.

My brain had become a Netflix subscription to AI's thinking—convenient, instant, and completely passive.

The realization hit me while reviewing my ChatGPT conversations. I'd asked the same questions dozens of times:

"How do I structure a compelling argument?"

"What makes content engaging?"

"How do I write better introductions?"

Each time, I got excellent answers.

Each time, I implemented them successfully.

Each time, I felt productive and smart.

But here's what I realized: I'd developed a dependency on going back to the chat instead of internalizing the frameworks.

I was like a student who could ace every test by looking at the answer key, but couldn't solve a single problem from memory. Except in this case, the "test" was my own thinking ability.

Turns out, I wasn't alone. MIT researchers just completed the first brain imaging study of people who regularly use ChatGPT to write essays, and the results explain exactly why this was happening to me, and probably to you too.

The numbers are brutal:

83.3% of regular users couldn't quote from essays they'd written minutes earlier

Brain connectivity collapsed from 79 neural connections to just 42

That's a 47% reduction in cognitive processing power

If your computer lost half its processing speed, you'd call it broken. That's exactly what's happening to our brains.
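If you want to check the 47% figure yourself, here is a quick sketch of the arithmetic. It uses only the two connection counts quoted above; the variable names are mine, not the study's.

```python
# Connection counts as quoted in the list above.
connections_before = 79
connections_after = 42

# Absolute drop, then the drop as a share of the starting count.
drop = connections_before - connections_after
reduction_pct = drop / connections_before * 100

print(f"{drop} fewer connections, a {reduction_pct:.1f}% reduction")
```

That works out to just under 47%, which is where the rounded number comes from.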

But the researchers only measured one type of AI use—passive extraction. They never studied what happens when people use AI as cognitive resistance training.

That's exactly what I discovered when I accidentally stumbled onto what neuroscientists call "meta-learning"—using AI not just to get information, but to develop the cognitive patterns that make you a better thinker, period.

In this post, I'll show you the exact framework I learned to transform every AI interaction into cognitive resistance training. The same approach that helped me build apps I could actually explain, write content I could defend, and solve problems I could replicate without artificial assistance.

The best part? It doesn't require using AI less. It requires using AI as your thinking gym instead of your thinking replacement.

Why smart people fall into the cognitive outsourcing trap

The MIT study reveals something disturbing: people experiencing cognitive decline from AI use don't notice it happening.

Why? Because the trap is designed to feel like progress.

Here's the invisible cycle that catches most AI users:

Encounter a challenge

Ask AI for the solution

Get a great answer instantly

Apply the answer successfully

Feel productive and smart

Encounter the same type of challenge later

Realize you can't solve it without AI

Return to step 2

The reason this feels productive is neurological. When you get quick answers that solve immediate problems, your brain releases dopamine—the same reward chemical as social media scrolling or gambling wins.

You're literally getting addicted to not thinking.

This gets particularly dangerous because you feel like you're getting smarter when you're actually getting more dependent.

The effort paradox

Think about the last time you truly mastered something difficult: learning a new language, understanding quantum physics, building a business from scratch.

The breakthrough moments didn't come from consuming information faster. They came from struggling with concepts until something clicked. The confusion was necessary. The effort was the education.

AI eliminates the struggle. And in doing so, it eliminates the learning.

This removes what learning researchers call "desirable difficulties": the cognitive load that actually strengthens neural pathways. When you work through problems step by step, your brain builds new connections. When AI hands you solutions, those connections never form.

The result? You can execute AI's suggestions flawlessly but can't innovate beyond them. You become fluent in AI-assisted tasks while losing fluency in independent thinking.

What meta-learning actually is

But what if we could flip this dynamic entirely?

Instead of using AI to avoid cognitive effort, what if we used AI to create productive cognitive effort?

That's exactly what meta-learning does. It's the difference between asking "What's the answer?" and asking "How do I think about this problem?"

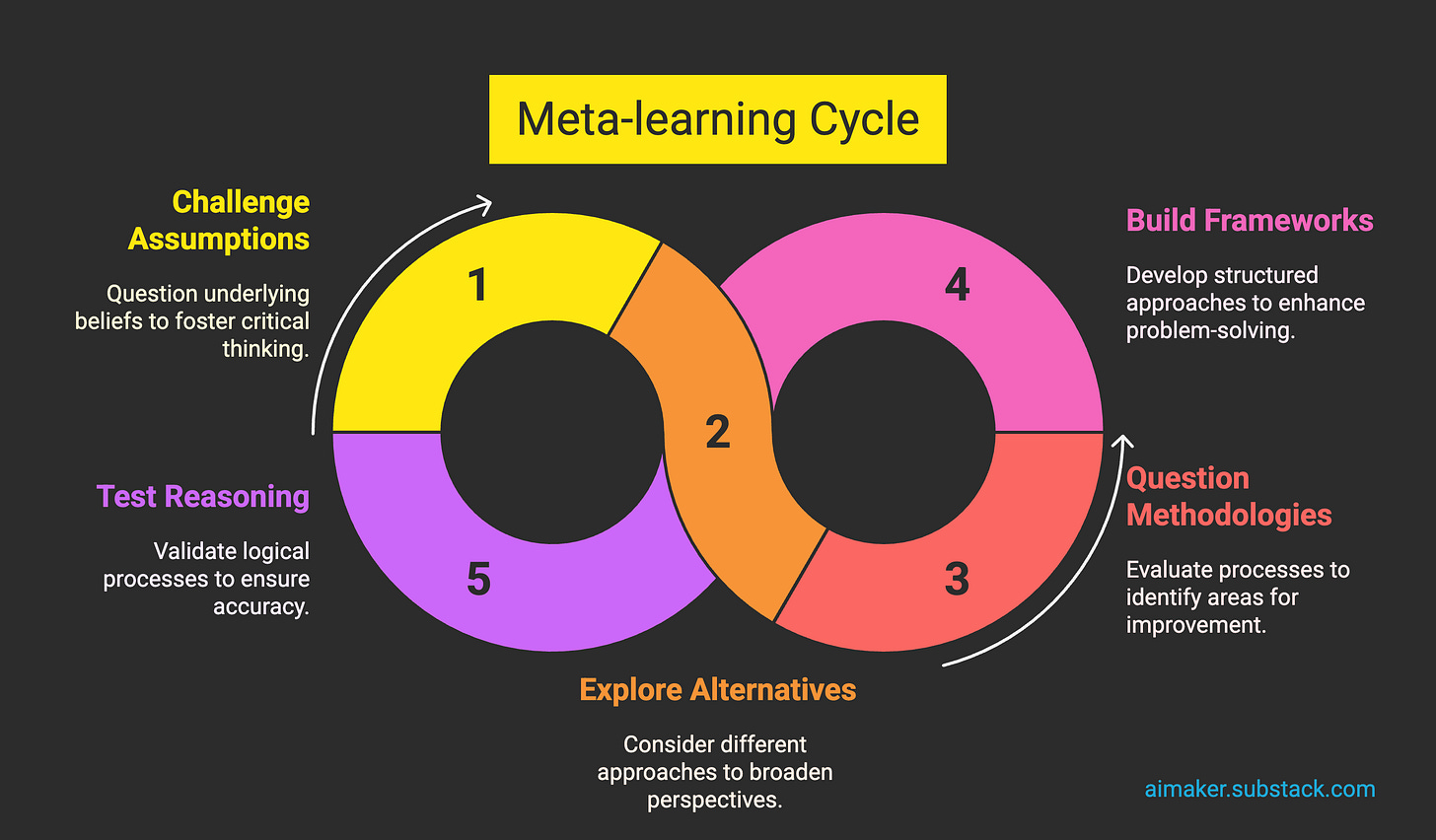

Meta-learning is using AI to build thinking frameworks, not just get answers. Every conversation becomes practice for better reasoning:

Challenge assumptions

Explore alternatives

Question methodologies

Build frameworks

Test reasoning

Remember: The goal is to become someone who asks better questions.

But to get there, you need to slow your AI conversations down and focus on the thinking process. Slowing down is what accelerates your cognitive development. The frameworks you build through AI interrogation transfer to every domain of your life: conversations with colleagues, strategic decisions, creative problems.

The Socratic method for the AI age

This approach isn't new—it's ancient. Socrates was history's greatest teacher, and he never gave direct answers. He only asked better questions. He forced students to examine their assumptions, defend their reasoning, and build understanding from the ground up.

We can program AI to behave the same way:

Instead of accepting AI's first response, you interrogate it like Socrates would.

Instead of optimizing for speed, you optimize for understanding.

Instead of extracting answers, you build thinking frameworks.

The difference is transformative.

Normal AI use: "Give me a marketing strategy for my product."

→ Get a comprehensive plan

→ Implement it successfully

→ Learn nothing transferable

Meta-learning approach: "What questions should I be asking about my market that I'm probably not considering?"

→ Uncover hidden assumptions

→ Explore multiple strategic angles

→ Build a framework for strategic thinking that applies to future challenges

The first approach makes you dependent on AI for marketing strategy. The second approach makes you better at strategic thinking.
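For readers who work with AI through code, here is one way the "program AI to behave like Socrates" idea could look. Treat it as a minimal sketch under assumptions: the instruction wording is my own paraphrase, the function name is hypothetical, and the role/content message list is a generic chat format that your tool may or may not use.

```python
# Hypothetical instruction text, paraphrasing the Socratic behavior described above.
SOCRATIC_INSTRUCTIONS = (
    "Act as a Socratic tutor. Do not give me a direct answer at first. "
    "Ask me one question at a time that makes me examine my assumptions, "
    "defend my reasoning, and build the answer myself."
)


def build_socratic_messages(user_question: str) -> list[dict[str, str]]:
    """Wrap a question in a role/content message list (an assumed, generic chat format)."""
    return [
        {"role": "system", "content": SOCRATIC_INSTRUCTIONS},
        {"role": "user", "content": user_question},
    ]


messages = build_socratic_messages(
    "What questions should I be asking about my market that I'm probably not considering?"
)
for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

How you send these messages depends on the tool you use, so that call is left out here. In a regular chat window, pasting the instruction text before your question does the same job.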

The Three-Deep Protocol is how I've learned to make meta-learning systematic.

The Three-Deep Protocol for your cognitive training system

The Three-Deep Protocol gives you a systematic method for interrogating any AI response at three progressively deeper levels. Each level builds stronger thinking muscles and prevents the neural atrophy the MIT study documented.

Think of it as resistance training for your brain.

Let's dive in.

Level 1: Question the content

What you're training: Critical evaluation and assumption recognition

After AI gives you any response, probe the substance of what it's telling you:

"Why did you choose this approach over alternatives?"

"What assumptions are you making here?"

"What would happen if the opposite were true?"

Example.

You ask: "How should I price my consulting services?"

AI suggests: "Research competitor pricing, calculate your costs, add 20-30% margin."

Level 1 questioning:

"Why start with competitor pricing instead of value-based pricing?"

"What assumptions are you making about my market position?"

"What if my service isn't directly comparable to competitors?"

The insight: Most pricing advice assumes you're a commodity. But if you're truly differentiated, competitor pricing becomes irrelevant. This questioning revealed I should focus on outcome value, not market rates.

What transfers: The habit of questioning initial assumptions applies to every decision—hiring choices, strategic planning, creative problem-solving.

Level 2: Question the process

What you're training: Systems thinking and methodological reasoning

Now interrogate how AI arrived at its conclusions:

"How did you prioritize these recommendations?"

"What mental model are you using here?"

"What would someone who disagrees with this approach say?"

Continuing the pricing example.

Level 2 questioning:

"How did you decide to prioritize cost-plus pricing over value-based pricing?"

"What mental model assumes clients care more about market rates than outcomes?"

"What would a premium positioning expert say about this approach?"

The insight: AI defaulted to a transactional pricing model because most training data comes from commodity services. But consultants selling transformation should price on value delivered, not time spent.

What transfers: Understanding decision-making processes helps you spot flawed reasoning patterns in meetings, negotiations, and strategic discussions.

Level 3: Question the gaps

What you're training: Strategic thinking and blind spot recognition

Finally, interrogate what's missing from the entire conversation:

"What am I not asking that I should be?"

"What context do you need to give me a better answer?"

"What would a top expert focus on that we're missing?"

Completing the pricing example.

Level 3 questioning:

"What pricing considerations am I not thinking about that seasoned consultants would focus on?"

"What context about my specific situation would change this advice completely?"

"What would a consulting expert with 20+ years in my market think about this?"

AI's response: "I'd need to understand your client acquisition costs, retention rates, project scope variability, and competitive differentiation. Also, different industries have completely different pricing psychologies."

The breakthrough insight: Pricing isn't just about numbers—it's about client psychology, market positioning, and business model design. The real question isn't "What should I charge?" but "How do I structure my business to command premium pricing?"

What transfers: This gap-identification skill helps you ask better questions in every professional context—client discovery, product planning, market research.
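If it helps to see all three levels in one place, the protocol can be written down as a small checklist in code. This is only a sketch: the level names, training goals, and generic questions are copied from the sections above, while the `Level` class and `follow_ups` function are hypothetical names I made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    name: str
    trains: str
    questions: tuple[str, ...]


# The three levels and their generic questions, as listed above.
THREE_DEEP = (
    Level(
        "Question the content",
        "Critical evaluation and assumption recognition",
        (
            "Why did you choose this approach over alternatives?",
            "What assumptions are you making here?",
            "What would happen if the opposite were true?",
        ),
    ),
    Level(
        "Question the process",
        "Systems thinking and methodological reasoning",
        (
            "How did you prioritize these recommendations?",
            "What mental model are you using here?",
            "What would someone who disagrees with this approach say?",
        ),
    ),
    Level(
        "Question the gaps",
        "Strategic thinking and blind spot recognition",
        (
            "What am I not asking that I should be?",
            "What context do you need to give me a better answer?",
            "What would a top expert focus on that we're missing?",
        ),
    ),
)


def follow_ups(depth: int = 3) -> list[str]:
    """Return the follow-up questions for levels 1 through `depth`, in order."""
    if not 1 <= depth <= len(THREE_DEEP):
        raise ValueError(f"depth must be between 1 and {len(THREE_DEEP)}")
    return [q for level in THREE_DEEP[:depth] for q in level.questions]


for number, level in enumerate(THREE_DEEP, start=1):
    print(f"Level {number}: {level.name} (trains: {level.trains})")
    for question in level.questions:
        print(f"  - {question}")
```

Calling `follow_ups(1)` gives only the content questions, which is a reasonable place to start before going all three levels deep. The generic questions work best once you adapt them to the answer in front of you, the way the pricing example does.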

How this transforms the AI work you're already doing

Let me show you how the Three-Deep Protocol transforms real work using my own newsletter creation process.

Here's my former approach, when I was stuck in extraction mode:

"Help me structure my newsletter about AI cognitive decline"

→ Get standard problem-agitation-solution outline

→ Write the post following that structure

→ Publish and move on

Meta-learning approach:

Instead of accepting AI's first structural suggestion, I applied the Three-Deep Protocol:

Level 1: "Why did you choose this linear approach over alternatives?"

Level 2: "What assumptions are you making about my audience?"

Level 3: "What if readers already suspect there's a problem but don't know how to name it?"

The breakthrough: This questioning revealed that my readers were likely already experiencing cognitive decline symptoms but hadn't connected them to their AI usage. So instead of convincing them there was a problem, I should start by helping them recognize patterns they were already experiencing.

The result: A completely different article structure that started with self-recognition rather than external research. But more importantly, I developed a framework for audience psychology that now guides every piece I write.

What transferred: The questioning pattern I used for content strategy now improves how I approach client discovery, product positioning, and business strategy. One deep AI conversation built cognitive skills I apply everywhere.

This is why meta-learning is different: instead of getting task-specific answers, you build transferable frameworks. Instead of neural decline, you get cognitive acceleration.

Your meta-learning challenge

The difference between cognitive decline and cognitive acceleration comes down to one simple choice: will you interrogate your next AI conversation or just extract from it?

Here's your immediate test: Pick the AI conversation you're about to have anyway today. Instead of optimizing for speed, optimize for one thing: can you explain the reasoning without looking back at the chat?

The litmus test: If you can't defend AI's approach to a colleague or replicate the thinking process on your own, you just experienced cognitive outsourcing. If you can, you built a transferable framework.

Most people will default to extraction mode within 48 hours.

The question is: are you most people?

Why this matters more than you think

Whether you use AI to improve your thinking or outsource it, here's how you'll know which path you're on:

When someone asks "How did you come up with that solution?" do you confidently explain your reasoning, or do you scramble to remember what ChatGPT told you?

When a client challenges your recommendation, can you defend the methodology behind it, or do you default to "that's what the AI suggested"?

When you encounter a similar problem next week, do you apply a framework you've internalized, or do you start from scratch with another AI prompt?

These moments reveal whether you're building cognitive capabilities or just renting them.

The meta-learning approach doesn't just prevent cognitive decline; it creates cognitive acceleration that compounds over time. You're not just learning to use AI better. You're learning to think better.

Which AI conversation will you interrogate first? Reply and let me know—I read every response and often feature the best experiments in future newsletters.

The people building cognitive frameworks today will be the ones thinking clearly tomorrow.

Until next week 👋🏻

Wyndo, it’s like we synced brains this week.

While you wrote about meta-learning as cognitive resistance training, I just dropped a piece on using AI to co-build understanding and actually learn how to do the task, not just delegate it.

https://aiblewmymind.substack.com/p/recursive-prompting-the-process-that

Same core idea, different entry points. Great minds, clearly.

Loved the “Netflix subscription to AI’s thinking” line. Painfully accurate.

AI is not shrinking your brain. You're shrinking your brain with the help of AI.

You choose the relationship you have with AI. You set the tone. You can totally craft interactions that push you, that challenge you, that force you to think better, do better, be better. That capability has been given to us - and it's laughably simple to do. Not easy... because we are literally wired to seek the path of least resistance, to find the easiest meal, to do the least dangerous thing. How else would the human race have survived all those centuries of adversity? Keeping your head down and delegating to something/someone else is part of who we are.

You 100% have the power to override your own wiring and reconditioning. That's your choice. You can use AI to do exactly that - which is where the opportunity lies.

If you reject AI because of your choices and your actions, you've just deepened the downward spiraling cycle.

For some reason, I believe in you, though. I know for a fact, you can choose better. But that's your choice to make. I can only sit back and watch what you decide.