I Thought Gemini Was Left Behind. Here Are the 5 Features That Changed My Workflow.

How Google quietly built the AI workflow tools we actually need.

If you've been following AI closely, you know this feeling:

Every new model makes you question everything you thought you knew.

Every release shifts the landscape before you've mastered the last one.

Every announcement has you ready to die on a new hill.

Look at this cycle: Every AI company claims to have "the world's most powerful model." And they're probably right—for about three months. Then the next announcement drops, and we're all supposed to get excited about the new king of the hill.

I've been riding this roller coaster for two years. But if there's one shift that genuinely surprised me, it's Google's comeback story.

A year ago, I wrote off Gemini.

Google felt lost while OpenAI and Anthropic dominated the conversation. I was completely wrong.

A few weeks ago, a reader shared something that perfectly captures what I've been experiencing.

This comment hit me because it reflects exactly what I've discovered: Google didn't just catch up. They built an entirely different approach to how we use AI.

Here's what most people miss: Gemini isn't just another chatbot competing with ChatGPT and Claude. It's actually two interconnected platforms:

Gemini (the familiar chat interface you probably know)

AI Studio (Google's experimental playground that most people have never touched)

What makes AI Studio different?

Think of it as Google's laboratory for multimodal AI interaction. Beyond the features I'll cover today, AI Studio offers capabilities you won't find elsewhere: speech generation that sounds eerily human, advanced video generation using Veo 2 and 3 models, and even the ability to build custom AI applications without coding.

But here's what caught my attention most: while other companies focus on making their models "more powerful," Google built tools that actually integrate with how we already work—your Google Drive, your YouTube viewing, your screen sharing habits.

Together, they offer capabilities that no other AI company provides. After three months of daily experimentation with both platforms, I've identified five game-changing features that work differently than anything OpenAI or Anthropic offers.

And here's the kicker: most of them are completely FREE.

I'm not telling you to switch completely to Gemini, but this post can at least help you understand Google's unique approach to solving problems that other AIs simply can't touch.

Let's dive into Gemini first.

1. Interactive visualization under 2 minutes

If you've been using ChatGPT's Canvas for easy data visualization, you know the reality is messy. You spend 10 minutes crafting prompts, get buggy code, then another 15 minutes debugging errors just to create a basic chart.

I learned this the hard way building visualizations for my research—what should take minutes often became hour-long debugging sessions.

Then I tried Gemini 2.5 Pro's approach, and everything changed.

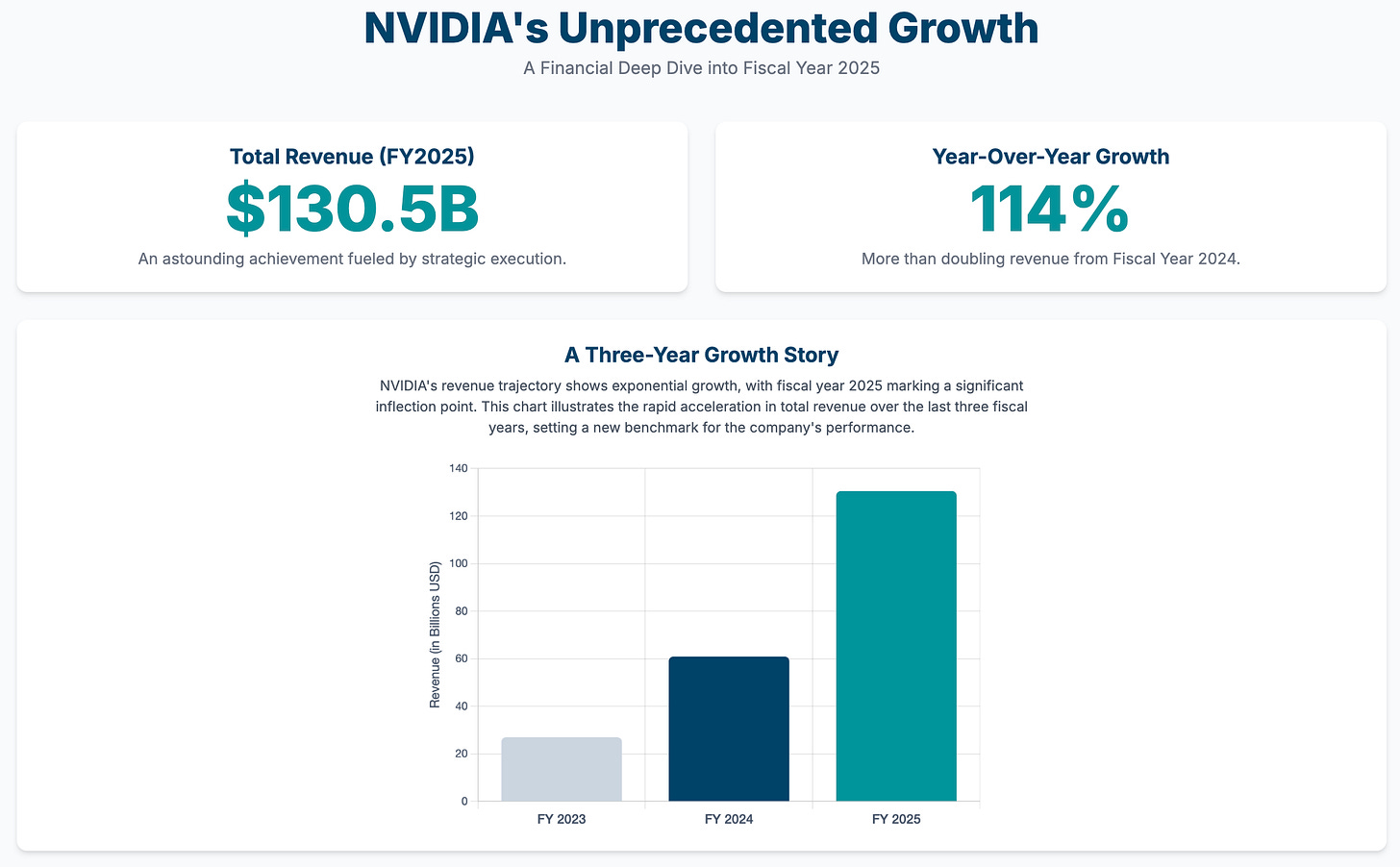

Last week, I needed to analyze Nvidia's financial performance to test this for my podcast. I fed the raw data from NotebookLM into Gemini with one simple prompt:

"Visualize this data as an interactive infographic."

Two minutes later:

No debugging.

No "can you fix this error" follow-ups.

No complex prompt engineering.

What makes Gemini different here isn't just speed—it's reliability. Gemini seems optimized specifically for creating functional visualizations that work on the first try.

You can also feed in your Google Sheets data and ask Gemini to visualize it any way you like. I was surprised by how good it was.

I don't know about you, but with this capability, I feel like I have a data analyst at my fingertips.

Canvas' capabilities don't stop there. You can also build mini-games that you can play around with.

2. Instant podcasts from any file type

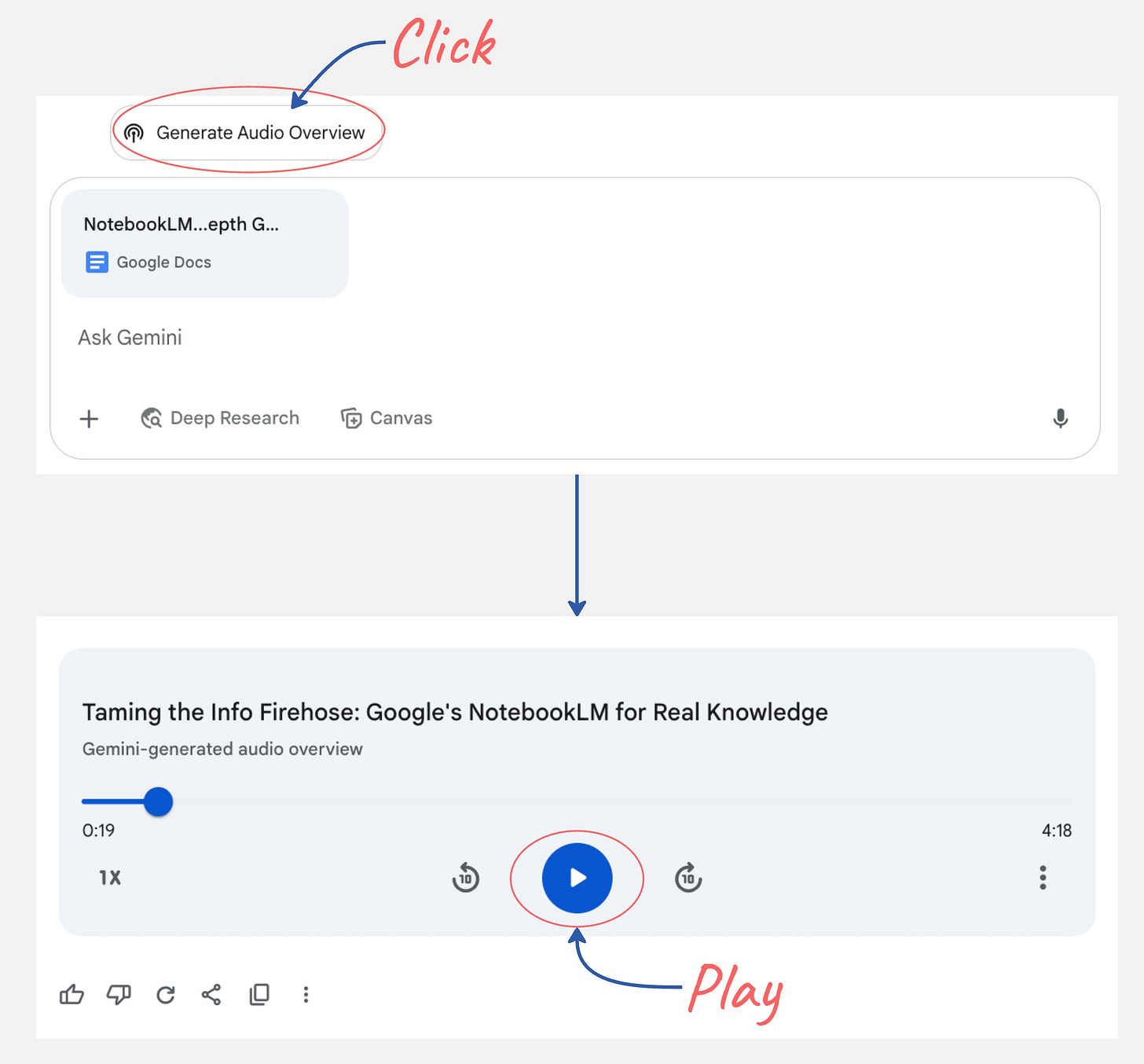

NotebookLM's podcast feature got everyone excited about AI-generated audio content. But here's the limitation: you need to manually upload and organize documents within NotebookLM's interface.

Gemini flips this completely.

Instead of uploading files to a separate platform, Gemini connects directly to your Google Drive and can process virtually any file format—PDFs, spreadsheets, and presentations.

Here's what this looks like in practice:

Currently, I'm creating an in-depth guide on how to use NotebookLM to supercharge your research. To improve my draft, I turn it into a podcast and listen to what it has to say about it. That helps me find the pieces I need to improve to strengthen my argument.

You can also do this by compiling all your files, uploading them to Gemini, and turning them into a podcast. This is a great alternative if you don't want to drown in the complexity of NotebookLM's user interface.

Within minutes, you can listen to files you have uploaded on the go.

3. YouTube as your personal research assistant

I used to avoid hour-long podcasts because taking notes felt like work.

Now I consume them like Twitter threads.

Here's what changed: Gemini can analyze any YouTube video directly from its URL. No downloading, no transcription tools, no third-party apps. Just paste the link and start asking questions.

This is completely different from other AI models. ChatGPT and Claude can't access YouTube directly—you need to find transcripts, copy-paste text, or use workarounds. Gemini eliminates all that friction.

Here's what you can do:

Find an interesting podcast or educational video

Paste the YouTube URL into Gemini

Ask: "What are the 3 most counterintuitive insights from this video?"
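If you'd rather script these steps than paste links by hand, here is a minimal sketch of what the same request might look like against the Gemini developer API. It only builds the request and sends nothing. The endpoint, the model name, and the `file_data` / `file_uri` shape are my assumptions about the API at the time of writing, and `build_youtube_request` is a made-up helper name, so check the current docs before relying on any of it.

```python
import json

# Assumed endpoint pattern and model name; verify against the current Gemini API docs.
API_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_youtube_request(video_url: str, question: str, model: str = "gemini-2.5-pro") -> dict:
    """Return the URL and JSON body for asking one question about a YouTube video.

    Hypothetical helper: it only assembles the request and never sends it.
    """
    if "youtube.com/watch" not in video_url and "youtu.be/" not in video_url:
        raise ValueError(f"Not a YouTube URL: {video_url}")
    body = {
        "contents": [
            {
                "parts": [
                    # Assumption: the video is passed by URL as a file_data part.
                    {"file_data": {"file_uri": video_url}},
                    {"text": question},
                ]
            }
        ]
    }
    return {"url": API_URL.format(model=model), "body": body}


if __name__ == "__main__":
    request = build_youtube_request(
        "https://www.youtube.com/watch?v=VIDEO_ID",  # placeholder, not a real video
        "What are the 3 most counterintuitive insights from this video?",
    )
    print(json.dumps(request, indent=2))
```

To actually send it, you would POST the body to the URL with your own API key. I left that part out on purpose so the sketch runs without credentials.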

For example, I love to summarize podcasts and generate actionable social notes in less than 15 minutes.

This helps me save time to keep up with the news while also helping me to generate social notes to grow my audience.

I'm not saying you have to ditch podcasts completely, but you can be more intentional about which ones get your full attention and which ones don't.

Now, let's explore Google AI Studio.

4. Your AI thinking partner that can actually see what you're working on

Most AI conversations feel like describing a movie to someone over the phone. You explain what you're looking at, they give advice based on incomplete information, and something gets lost in translation.

Google AI Studio's live stream feature eliminates that gap entirely.

I can now share my screen directly with Gemini while talking through problems in real-time. It's like having a brilliant colleague sitting next to me, except this colleague never gets tired of brainstorming and actually pays attention to every detail on my screen.

Here's how this transformed my work:

Last week, I was stuck on the copywriting for my consulting page. Instead of typing long descriptions of my layout and content, I opened AI Studio's live stream, shared my screen, and started talking through my ideas out loud.

"Look at this hero section—does the headline connect with the description below it? What's missing from this message?"

Gemini could see exactly what I was pointing to, analyze the visual hierarchy and the copy, and suggest specific improvements:

"The headline promises transformation, but your descriptions focus on features. Try connecting each feature to an emotional outcome."

It's not just faster—it's more natural. I think out loud, gesture at elements on screen, and get feedback that actually addresses what I'm working on, not what I managed to describe in text.

This has become my go-to method for working through creative blocks. When I'm stuck, I just start talking to Gemini about whatever's on my screen. The combination of voice input and visual context creates a thinking dynamic I can't get anywhere else.

Pro tip: Use this for any visual work—design feedback, website reviews, presentation planning. The moment you find yourself trying to describe what something looks like in a chat, switch to live stream instead.

5. Context that never forgets + conversations that never get stuck

Here's the productivity killer with most AI tools: you hit the context limit right when the conversation gets interesting.

The numbers tell the story:

While other AIs force you to break complex projects into chunks, AI Studio lets you upload your entire project context—every document, every conversation, every piece of research—and maintain that understanding throughout your work session.

AI Studio solves this with two game-changing features: the massive 1M token context window and conversation branching.

The long context means I can work on complex projects without losing momentum. Currently, I'm working on an AI-powered todo list app for the Bolt hackathon, so I uploaded my entire code repository to AI Studio. Instead of breaking it into small chunks across multiple chats, everything lived in one conversation.

Then I asked:

"Based on all this context, what are three feature expansions that would increase user engagement while staying true to the app's core mission?"

The response referenced specific code patterns and suggested features that built logically on existing functionality—because it could see the complete picture, not just fragments.
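Before uploading a whole repository, it helps to sanity-check whether it plausibly fits in the window. Here is a rough sketch of that arithmetic. The 4-characters-per-token ratio is a common rule of thumb and not Gemini's real tokenizer, and the file extensions, the 100k-token reserve, and the function names are all choices I made for illustration.

```python
from pathlib import Path

CONTEXT_WINDOW_TOKENS = 1_000_000  # the 1M figure from this section
CHARS_PER_TOKEN = 4  # rough rule of thumb (assumption); real tokenizers vary
TEXT_SUFFIXES = {".py", ".js", ".ts", ".tsx", ".json", ".md", ".html", ".css"}


def estimate_tokens(text: str) -> int:
    """Estimate token count from character count."""
    return len(text) // CHARS_PER_TOKEN


def estimate_repo_tokens(root: str) -> int:
    """Sum the estimates for every text-like file under root."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in TEXT_SUFFIXES:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(token_count: int, reserve_for_chat: int = 100_000) -> bool:
    """Leave some headroom for the conversation itself."""
    return token_count + reserve_for_chat <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    tokens = estimate_repo_tokens(".")
    print(f"~{tokens:,} tokens, fits: {fits_in_context(tokens)}")
```

Note that this walks every folder, including dependency directories, so point it at your source folder if the estimate looks inflated.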

But here's where it gets really powerful: conversation branching.

Midway through planning, I wanted to explore a completely different direction—what if we pivoted to enterprise users instead of individual productivity? Instead of risking my main conversation thread, I created a branch.

Now I had two parallel explorations running simultaneously:

Branch 1: Consumer feature expansion

Branch 2: Enterprise pivot strategy

Each branch maintained full context while exploring different scenarios. No more choosing between following a new idea or protecting existing progress.
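One way to picture why each branch keeps full context is to think of the conversation as a tree: a branch is just a new reply hanging off an earlier turn, so both paths share everything above the fork. The sketch below is my own mental model with invented names (`Turn`, `reply`, `history`), not a description of how AI Studio implements branching.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Turn:
    """One message in a conversation tree (illustrative only)."""
    role: str
    text: str
    parent: Optional["Turn"] = field(default=None, repr=False)
    children: list["Turn"] = field(default_factory=list)

    def reply(self, role: str, text: str) -> "Turn":
        """Add a follow-up turn; calling this twice on one turn creates a branch."""
        child = Turn(role, text, parent=self)
        self.children.append(child)
        return child

    def history(self) -> list[str]:
        """Walk back to the root to rebuild the full context for this branch."""
        turns = []
        node: Optional["Turn"] = self
        while node is not None:
            turns.append(f"{node.role}: {node.text}")
            node = node.parent
        return list(reversed(turns))


root = Turn("user", "Here is my todo app's code. Suggest feature expansions.")
shared = root.reply("model", "Three ideas based on the existing code.")
consumer = shared.reply("user", "Go deeper on consumer features.")
enterprise = shared.reply("user", "What if we pivoted to enterprise users?")

print(consumer.history())
print(enterprise.history())
```

Both histories start with the same two turns and differ only after the fork, which is the property that lets you explore the enterprise idea without touching the consumer thread.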

This convinces me that AI Studio can become your persistent project partner. Upload everything related to your challenge—documents, videos, audio notes, screenshots—and explore multiple strategic directions without losing any context.

Additionally, Google AI Studio offers multimodal inputs: Google Drive files, YouTube videos, audio recordings, camera captures—all in one conversation that remembers everything. Imagine how much you can achieve using only one chat room.

Pro tip: Use branching for any decision where you want to explore multiple paths. Instead of second-guessing your choices, create branches and compare approaches side by side.

Your Gemini integration strategy

Here's my honest take on when Gemini makes sense:

Use Gemini when you need:

Reliable data visualizations without debugging hell

Quick YouTube video analysis for research

Instant podcast generation from existing files

Use AI Studio when you need:

Collaboration on complex projects

Long-term project memory that persists across sessions

Multiple scenario exploration without losing context

Stick with ChatGPT/Claude when you need:

Complex reasoning and writing

General work assistance

Established workflows that already work

The bottom line

Google isn't just catching up—they're pioneering an entirely different approach to how we use AI.

While competitors obsess over "most powerful" claims, Google solved the real problem: making AI actually integrate with how we work. And I'm convinced it will only get better.

Their multimodal capabilities and ecosystem integration reveal something others are missing—the future is about seamless collaboration.

You don't need to abandon ChatGPT or Claude tomorrow. But ignoring these unique capabilities means missing tools that could transform workflows other AIs simply can't touch.

Your next 30 minutes

Pick one Gemini feature that addresses a current frustration in your work. Try it with a real project, not a test case. The goal is to expand your AI toolkit with capabilities that simply don't exist elsewhere.

Which feature will you try first?

Reply and let me know—I read every response and often use them to shape future content.

The AI landscape keeps shifting, but the winners aren't the ones chasing every new model. They're the ones who thoughtfully integrate the right capabilities for their specific needs.

Until next week,

Wyndo
